Currently I’m working with a cloud based service where we are exploiting available data about addresses, business entities and consumers/citizens from all over the world.

The cost of such data varies a lot around the world.

In Denmark, where the product is born, the costs of such data are relatively low. The joys of the welfare state also apply to access to open public sector data as reported in the post The Value of Free Address Data. Also you are able to check the identity of an individual in the citizen hub. Doing it online on a green screen you will be charged (what resembles) 50 cent, but doing it with cloud service brokerage, like in iDQ™, it will only cost you 5 cent.

In the United Kingdom the prices for public sector data about addresses, business entities and citizens are still relatively high. The Royal Mail has a license tag on the PAF file even for government bodies. Ordnance Survey is given the rest of AddressBase free for the public sector, but there is a big tag for the rest of the society. The electoral roll has a price tag too even if the data quality isn’t considered for other uses than the intended immediate purpose of use as told in the post Inaccurately Accurate.

At the moment I’m looking into similar services for the United States and a lot of other countries. Generally speaking you can get your hands on most data for a price, and the prices have come down since I checked the last time. Also there is a tendency of lowering or abandoning the price for the most basic data as names and addresses and other identification data.

As poor data quality in contact data is a big cost for most enterprises around the world, the news of decreasing prices for big reference data is good news.

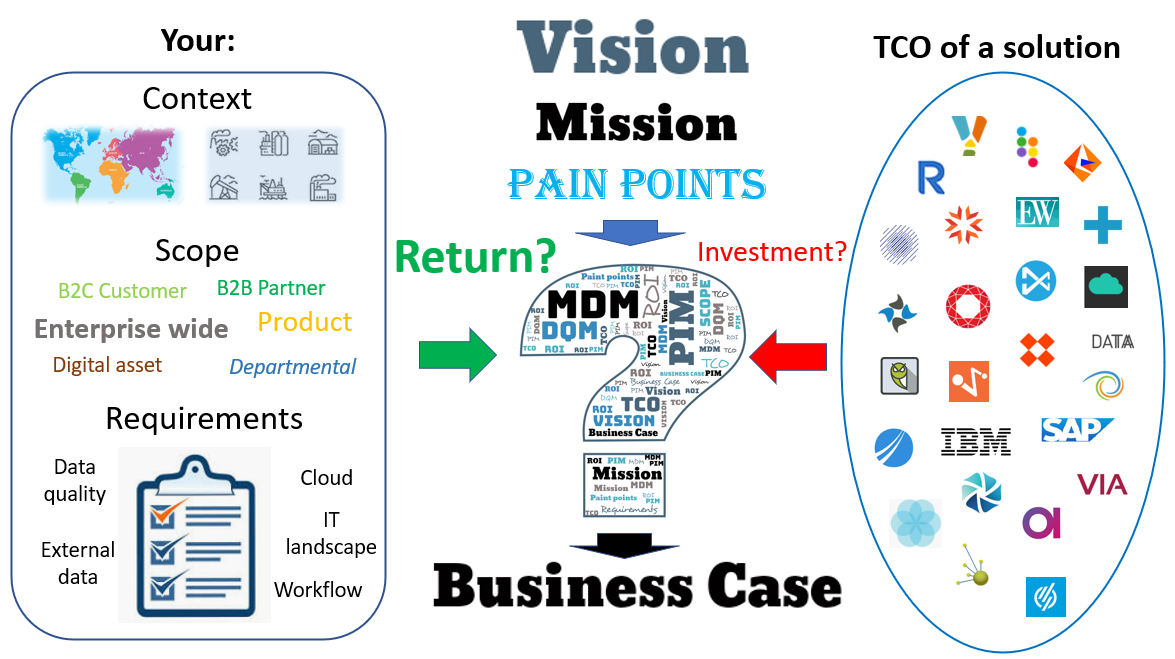

However, if you are doing business internationally it is a daunting task to keep up with where to find the best and most cost effective big reference data sources for contact data and not at least how to use the sources in business processes.

Wednesday the 25th July I’m giving a presentation, in the cloud, on how iDQ™ comes to the rescue. More information on DataQualityPro.

56.085053

12.439756