I was sad to learn from this LinkedIn update by C. Lwanga Yonke that Larry English has passed away.

As stated there: “When the story of Information Quality Management is written, the first sentence of the first paragraph will include the name Larry English”.

Larry pioneered the discipline of data quality, or information quality, as he preferred to call it.

He was an inspiration to many data and information quality practitioners in the 1990s and 2000s, including me, and he paved the way for bringing this topic to the level of awareness it has today.

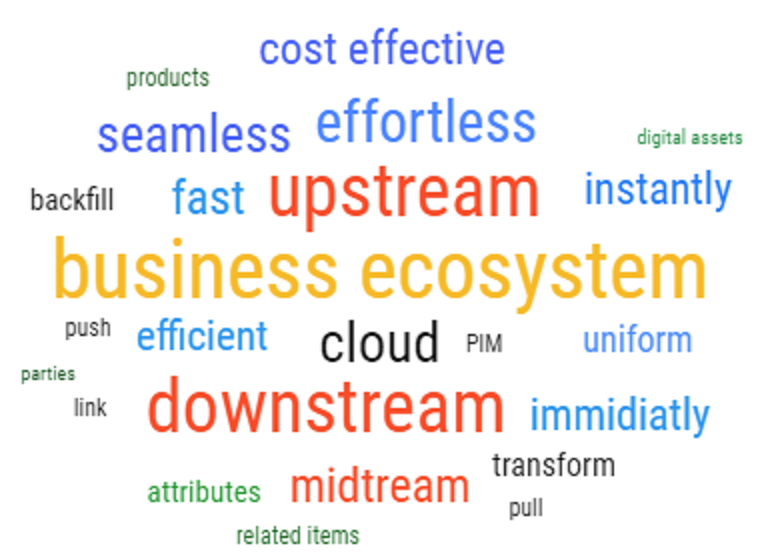

In his teaching, Larry emphasized the simple but powerful concepts that form the foundation of data quality and information quality methodologies:

- Quantify the costs and lost opportunities of poor information quality

- Always look for the root cause of poor information quality

- Follow the plan-do-check-act cycle when solving information quality issues

Let us roll up our sleeves and continue what Larry started.

Even the Romans knew this: Seneca the Younger is credited with saying “errare humanum est”, which translates to “to err is human”. He also added “but to persist in error is diabolical”.

Often it still takes a human eye to establish whether a number, year, term or other piece of information is wrong.