One of the big news stories this week was the detection of gravitational waves. What makes this huge step in science so important is that we will now be able to see things in space that we could not see before. These are things we have plenty of clues about, but we cannot measure them because they either do not emit electromagnetic radiation, or the light from them is absorbed or reflected by cosmic bodies or dust before it reaches our telescopes.

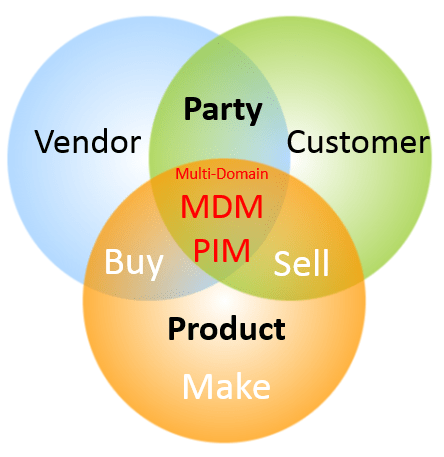

We have something similar in the MDM (Master Data Management) world. We know that there is such a thing as multi-domain Master Data Management, but our biggest telescope, the Gartner Magic Quadrants, has until now only clearly identified customer Master Data Management and product Master Data Management, as most recently touched on in the post The Perhaps Second Most Important MDM Quadrant 2015 is Out.

Indeed, many MDM programmes that actually do encompass all MDM domains split the effort into traditional domains such as customer, vendor and product, with separate teams observing their own part of the sky. It takes a lot to argue that, although vendors belong to the buy side and customers belong to the sell side of the organization, there are strong ties between these objects. We can detect gravity in the fact that a vendor and a customer can be the same real-world entity, and that vendors and customers share the same basic structure: both are parties.
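
The party idea can be made concrete with a small data structure. Below is a minimal, hypothetical Python sketch. The names `Party`, `PartyRole` and `PartyRegistry` are illustrative assumptions and do not come from any MDM product. It keeps one record per real-world entity and holds customer and vendor as roles on that record instead of as separate records. It also assumes a shared identifier (`party_id`) is already known for each entity, which in practice is the hard matching part.

```python
from dataclasses import dataclass, field
from enum import Enum


class PartyRole(Enum):
    """Hypothetical roles a party can play towards the organization."""
    CUSTOMER = "customer"  # sell side
    VENDOR = "vendor"      # buy side


@dataclass
class Party:
    """One real-world person or legal entity, recorded once."""
    party_id: str
    name: str
    kind: str  # "person" or "legal entity"
    roles: set[PartyRole] = field(default_factory=set)


class PartyRegistry:
    """Holds one Party per real-world entity, keyed by an assumed shared ID."""

    def __init__(self) -> None:
        self._parties: dict[str, Party] = {}

    def register(self, party_id: str, name: str, kind: str, role: PartyRole) -> Party:
        # Reuse the existing party if the same entity appears in another role,
        # instead of creating a separate, disconnected vendor or customer record.
        # (This sketch keeps the first name/kind seen and ignores later ones.)
        party = self._parties.get(party_id)
        if party is None:
            party = Party(party_id, name, kind)
            self._parties[party_id] = party
        party.roles.add(role)
        return party

    def with_role(self, role: PartyRole) -> list[Party]:
        # All parties holding the given role, e.g. every vendor.
        return [p for p in self._parties.values() if role in p.roles]


if __name__ == "__main__":
    registry = PartyRegistry()
    registry.register("P-001", "Acme Ltd", "legal entity", PartyRole.CUSTOMER)
    acme = registry.register("P-001", "Acme Ltd", "legal entity", PartyRole.VENDOR)
    print(sorted(r.value for r in acme.roles))  # ['customer', 'vendor']
```

If the same `party_id` is registered once as a customer and once as a vendor, the result is a single `Party` that carries both roles. That single record captures the tie between buy side and sell side, which separate customer and vendor hubs would not see.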

Products behave differently depending on the industry your organization belongs to. You may make products from raw materials you buy and transform into finished products you sell, and/or you may buy and sell the same physical product as a distributor, retailer or other value-adding node in the supply chain. PIM (Product Information Management) has long been known as a way to handle the drastically increased demand for product data related to eCommerce, and many organizations throughout supply chains have already established PIM capabilities, whether or not they also have product Master Data Management, and whether PIM sits inside or outside it.

What we still need to detect is a good system for connecting the PIM portions of sell sides upstream with buy sides downstream in supply chains. Right now we only see a blurred galaxy of spreadsheets, as examined in the post Excellence vs Excel.

A pearl is a popular gemstone. Natural pearls, meaning pearls that have formed spontaneously in the wild, are very rare. Instead, most pearls are farmed in fresh water, and therefore, under regulations in many countries, they must be referred to as cultured freshwater pearls.

When selecting an identifier, there are different options such as national IDs, LEI, DUNS Number and others, as explained in the post
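
Here is one concrete angle on these options. The LEI (Legal Entity Identifier, ISO 17442) is 20 alphanumeric characters, and the last two are check digits computed with ISO 7064 MOD 97-10, the same scheme IBANs use. The minimal Python sketch below uses hypothetical function names and checks only that structure. It cannot tell whether an LEI has actually been issued or is still current. That requires a lookup in the GLEIF data.

```python
import string

# Characters allowed in an LEI (ISO 17442): digits and upper-case Latin letters.
_ALLOWED = set(string.digits + string.ascii_uppercase)


def _to_digits(code: str) -> str:
    # ISO 7064 MOD 97-10 convention: digits stay as they are, A=10 ... Z=35.
    return "".join(str(int(ch, 36)) for ch in code)


def lei_check_digits(prefix: str) -> str:
    """Compute the two check digits for the first 18 characters of an LEI."""
    prefix = prefix.upper()
    if len(prefix) != 18 or not set(prefix) <= _ALLOWED:
        raise ValueError("expected 18 characters from 0-9 and A-Z")
    remainder = int(_to_digits(prefix + "00")) % 97
    return f"{98 - remainder:02d}"


def is_valid_lei(lei: str) -> bool:
    """Structural check only: length, character set and check digits."""
    lei = lei.strip().upper()
    if len(lei) != 20 or not set(lei) <= _ALLOWED or not lei[18:].isdigit():
        return False
    # A correct LEI, read as one big number, leaves remainder 1 modulo 97.
    return int(_to_digits(lei)) % 97 == 1


if __name__ == "__main__":
    prefix = "ABCDEFGHIJ12345678"  # hypothetical, not a registered LEI
    lei = prefix + lei_check_digits(prefix)
    print(lei, is_valid_lei(lei))
```

A check like this catches typos at data entry. The choice between LEI, DUNS Number or a national ID is still a business decision about coverage and cost.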

An important part of implementing Master Data Management (MDM) is to capture the business rules that exist within the implementing organization and build those rules into the solution. In addition, and maybe even more important, is the quest of crafting new business rules that help make master data more valuable to the implementing organization.
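
One way to build both captured and newly crafted rules into the solution is to hold them as named, documented data rather than burying them in application code. The Python sketch below is a hypothetical illustration and not a feature of any particular MDM product. Each `BusinessRule` has a name, a description, the entity type it applies to and a predicate, and `violations` lists the rules a record breaks. Both example rules are invented for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]  # a master data record as field name -> value


@dataclass(frozen=True)
class BusinessRule:
    """A named, documented rule that a master data record must satisfy."""
    name: str
    description: str
    applies_to: str                  # entity type, e.g. "customer"
    check: Callable[[Record], bool]  # True when the record complies


def violations(record: Record, entity_type: str, rules: list[BusinessRule]) -> list[str]:
    """Return the names of all applicable rules the record breaks."""
    return [
        rule.name
        for rule in rules
        if rule.applies_to == entity_type and not rule.check(record)
    ]


# Hypothetical example rules.
RULES = [
    BusinessRule(
        name="customer_has_country",
        description="Every customer must have a two-letter country code.",
        applies_to="customer",
        check=lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2,
    ),
    BusinessRule(
        name="vendor_has_payment_terms",
        description="Vendors must have agreed payment terms.",
        applies_to="vendor",
        check=lambda r: bool(r.get("payment_terms")),
    ),
]

if __name__ == "__main__":
    print(violations({"name": "Acme", "country": "Denmark"}, "customer", RULES))
    # prints ['customer_has_country']
```

With this layout, adding a newly crafted rule means appending one more `BusinessRule`. Because the description travels with the check, business users can more easily review what the solution enforces.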

While the innovators and early adopters are fighting with big data quality, the late majority are still trying to get their heads around how to manage small data. And that is a good thing, because you cannot utilize big data without solving small data quality problems, not least around master data, as told in the post

Solving data quality problems is not just about fixing data. It is very much also about fixing the structures around data, as explained in a post, featuring the pope, called

A common roadblock on the way to solving data quality issues is that things that are everybody’s problem tend to be no one’s problem. Implementing a data governance programme is evolving as the answer to that conundrum. As with many things in life, data governance is about thinking big and starting small, as told in the post

Data governance revolves a lot around people’s roles, and there are also some specific roles within data governance. Data owners have been known for a long time, data stewards have been around for some time, and now we also see Chief Data Officers emerge, as examined in the post

If we look at customer, or rather party, Master Data Management (MDM), it is very much about real-world alignment. In party master data management you describe entities such as persons and legal entities in the real world, and you should have descriptions that reflect the current state (and sometimes historical states) of these entities. Some reflections will be

If we look at customer, or rather party, Master Data Management (MDM) it is much about real world alignment. In party master data management you describe entities as persons and legal entities in the real world and you should have descriptions that reflect the current state (and sometimes historical states) of these entities. Some reflections will be