A year ago I wrote a blog post about data matching published on the Informatica Perspective blog. The post was called Five Future Data Matching Trends.

One of the trends mentioned is hierarchical data matching.

One of the trends mentioned is hierarchical data matching.

The reason we need what may be called hierarchical data matching is that more and more organizations are looking into master data management and then they realize that the classic name and address matching rules do not necessarily fit when party master data are going to be used for multiple purposes. What constitutes a duplicate in one context, like sending a direct mail, doesn’t necessary make a duplicate in another business function and vice versa. Duplicates come in hierarchies.

One example is a household. You probably don’t want to send two sets of the same material to a household, but you might want to engage in a 1-to-1 dialogue with the individual members. Another example is that you might do some very different kinds of business with the same legal entity. Financial risk management is the same, but different sales or purchase processes may require very different views.

I usually divide a data matching process into three main steps:

- Candidate selection

- Match scoring

- Match destination

(More information on the page: The Art of Data Matching)

Hierarchical data matching is mostly about the last step where we apply survivorship rules and execute business rules on whether to purge, merge, split or link records.

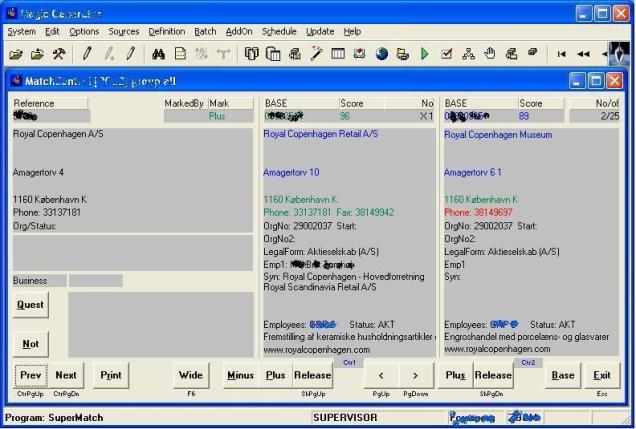

In my experience there are a lot of data matching tools out there capable of handling candidate selection, match scoring, purging records and in some degree merging records. But solutions are sparse when it comes to more sophisticated things like spitting an original entity into two or more entities by for example Splitting Names or linking records in hierarchies in order to build a Hierarchical Single Source of Truth.

This spring Jim Harris made a brilliant series of articles on DataQualityPro on the subject of identifying duplicate customers ending with

This spring Jim Harris made a brilliant series of articles on DataQualityPro on the subject of identifying duplicate customers ending with