In the middle of next month, iDQ will move our London office to a new address:

iDQ A/S

2nd Floor

Berkeley Square House

Berkeley Square

London

W1J 6BD

United Kingdom

It’s a good old English address, taking up a lot of lines on an envelope.

The address could be written either shorter or longer.

In fact, the address below will be enough to get a letter delivered:

iDQ A/S

2nd Floor

W1J 6BD

UK

Due to the granular UK postcode system, a single postcode may cover a single address, part of a long road, or a small street.

This structure is also what is exploited in so-called rapid addressing, where you only type in the data needed and the rest is supplied by a (typically cloud) service.
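To make the rapid addressing idea a bit more concrete, here is a minimal Python sketch. It assumes a hypothetical in-memory table (`POSTCODE_LOOKUP`) standing in for the real service, and the helper names `normalise_postcode` and `expand_address` are illustrative only, not any provider's API. The user supplies only the organisation, the floor and the postcode; everything else comes from the lookup.

```python
# Hypothetical lookup table standing in for a rapid addressing service.
# A real service would hold data for every postcode; this single entry
# is illustrative only and reuses the address from this post.
POSTCODE_LOOKUP = {
    "W1J 6BD": {
        "building": "Berkeley Square House",
        "street": "Berkeley Square",
        "town": "London",
    },
}


def normalise_postcode(raw: str) -> str:
    """Upper-case a UK postcode and put a single space before the inward code."""
    compact = "".join(raw.split()).upper()
    # The inward code is always the last three characters (e.g. "6BD").
    return f"{compact[:-3]} {compact[-3:]}"


def expand_address(organisation: str, premise: str, postcode: str) -> list[str]:
    """Turn the minimal data a user types into a full set of envelope lines."""
    code = normalise_postcode(postcode)
    entry = POSTCODE_LOOKUP.get(code)
    if entry is None:
        raise KeyError(f"Unknown postcode: {code}")
    return [
        organisation,
        premise,            # typed by the user: the postcode can't know the floor
        entry["building"],  # everything from here on is supplied by the lookup
        entry["street"],
        entry["town"],
        code,
        "United Kingdom",
    ]


if __name__ == "__main__":
    for line in expand_address("iDQ A/S", "2nd Floor", "w1j6bd"):
        print(line)
```

Because a UK postcode often narrows things down to a single building, the lookup can fill in the building, street and town, while the user only has to type what the postcode can't tell, such as the floor.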

But sometimes people want their addresses presented differently from the official way. Maybe I want our address to be:

iDQ A/S

2nd Floor

Berkeley Square House

Berkeley Square

Mayfair

London

W1J 6BD

United Kingdom

Mayfair is a nice part of London. Insisting on including this element in the address is an example of vanity addressing.

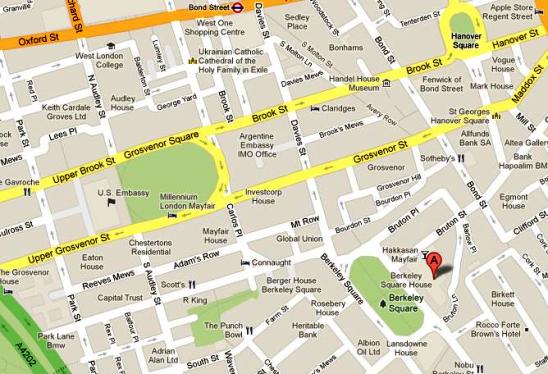

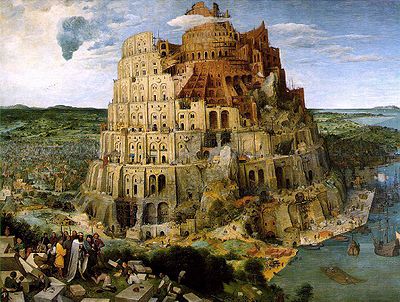

Here’s the map of the area:

Notice the place in the upper right corner of the Google Map: Apple Store Regent Street, marked with a bed icon. This means it’s a hotel. Is the Apple Store really a hotel? No, except for a while some time ago, when people slept in front of the store waiting for a product with a notable map service, as reported by Richard Northwood (aka The Data Geek) in the post Data Quality Failure – Apple Style.

Well Google, you can’t win them all.