One of the things that data quality tools do is data matching. Data matching mostly concerns the party master data domain. It is about comparing two or more data records that do not contain exactly the same data but describe the same real-world entity.
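To make the idea concrete, here is a minimal sketch of comparing two party records that are not identical but may describe the same person. It assumes records are plain dicts with hypothetical `name` and `address` fields, and it uses the standard library's `difflib.SequenceMatcher` as a stand-in similarity measure. The 0.85 threshold is an arbitrary illustration; real data quality tools use far more refined comparison and scoring techniques.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio, ignoring case and repeated spaces."""
    norm_a = " ".join(a.lower().split())
    norm_b = " ".join(b.lower().split())
    return SequenceMatcher(None, norm_a, norm_b).ratio()


def is_probable_match(rec_a: dict, rec_b: dict, threshold: float = 0.85) -> bool:
    """Treat two records as the same entity if the average similarity of
    their (hypothetical) name and address fields reaches the threshold."""
    fields = ("name", "address")
    score = sum(similarity(rec_a[f], rec_b[f]) for f in fields) / len(fields)
    return score >= threshold


a = {"name": "John Smith", "address": "12 High Street"}
b = {"name": "Jon Smith", "address": "12 High  Street"}
c = {"name": "Mary Jones", "address": "4 Park Lane"}
print(is_probable_match(a, b))  # True
print(is_probable_match(a, c))  # False
```

The two "John Smith" records are flagged as a probable match even though neither field is byte-for-byte identical.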

A common approach is to compare data records held in internal master data repositories within your organization. However, there are great advantages in bringing in external reference data sources to support the data matching.

The kinds of big reference data I have worked with for this purpose include:

Business directories:

The business-to-business (B2B) world does not have privacy issues to the degree we see in the business-to-consumer (B2C) world. Therefore there are many business directories out there with a fairly complete picture of which business entities exist in a given country, and even in regions and the whole world.

A common approach is to first match your internal B2B records against a business directory and obtain a unique key for each business entity. The next step, matching business entities that share the same unique key, is then a no-brainer.
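The two-step approach can be sketched as follows. This is a minimal illustration under loud assumptions: `DIRECTORY` is a hypothetical in-memory stand-in for a business directory, keyed on a crudely normalized name plus country, and keys such as `BD-0001` are invented. A real directory match is fuzzy and returns the directory's own identifier. Records without a directory hit are left unkeyed and must be matched by other means.

```python
from collections import defaultdict

# Common legal-form suffixes to ignore when comparing names (illustrative list).
LEGAL_FORMS = {"inc", "ltd", "llc", "corp", "gmbh"}

# Hypothetical business directory: (normalized name, country) -> unique key.
DIRECTORY = {
    ("acme", "US"): "BD-0001",
    ("globex", "US"): "BD-0002",
}


def normalize(name: str) -> str:
    """Lowercase, drop punctuation and legal-form suffixes."""
    cleaned = "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace())
    return " ".join(t for t in cleaned.split() if t not in LEGAL_FORMS)


def assign_directory_keys(records):
    """Step 1: look each internal record up in the directory (None if no hit)."""
    return [
        {**rec, "directory_key": DIRECTORY.get((normalize(rec["name"]), rec["country"]))}
        for rec in records
    ]


def group_by_key(keyed_records):
    """Step 2: records sharing a directory key describe the same business."""
    groups = defaultdict(list)
    for rec in keyed_records:
        if rec["directory_key"] is not None:
            groups[rec["directory_key"]].append(rec["id"])
    return dict(groups)


internal = [
    {"id": 1, "name": "ACME Inc.", "country": "US"},
    {"id": 2, "name": "Acme, Inc", "country": "US"},
    {"id": 3, "name": "Globex Corp", "country": "US"},
    {"id": 4, "name": "Initech", "country": "US"},
]
keyed = assign_directory_keys(internal)
print(group_by_key(keyed))  # {'BD-0001': [1, 2], 'BD-0002': [3]}
hit_rate = sum(r["directory_key"] is not None for r in keyed) / len(keyed)
print(f"hit rate: {hit_rate:.0%}")  # hit rate: 75%
```

Once keys are assigned, step 2 is a plain group-by; all the difficulty sits in step 1, where record 4 finds no directory entry.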

The problem, though, is that an automatic match between internal B2B records and a business directory most often does not yield a 100 % hit rate. Not even close, as examined in the post 3 out of 10.

Address directories:

Address directories are mostly used to standardize postal address data, so that two addresses in internal master data that standardize to exactly the same written form can be matched more reliably.
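As a rough illustration of why standardization helps, the sketch below maps two differently written addresses to one canonical form. The `ABBREVIATIONS` table is a hypothetical, tiny substitute for an address directory; a real directory supplies the official spelling of each street and postal code rather than a generic abbreviation list.

```python
import re

# Hypothetical abbreviation table standing in for an address directory.
ABBREVIATIONS = {
    "st": "street",
    "str": "street",
    "rd": "road",
    "ave": "avenue",
    "apt": "apartment",
}


def standardize_address(address: str) -> str:
    """Lowercase, strip punctuation and expand known abbreviations."""
    tokens = re.findall(r"[a-z0-9]+", address.lower())
    return " ".join(ABBREVIATIONS.get(tok, tok) for tok in tokens)


a = standardize_address("12 High St.")
b = standardize_address("12, High Street")
print(a, "|", b, "|", a == b)  # 12 high street | 12 high street | True
```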

A deeper use of address directories is to exploit related property data. The probability that two records with “John Smith” at the same address are a true positive match is much higher if the address is a single-family house as opposed to a high-rise building, nursing home or university campus.
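One simple way to picture this is to weight a same-address name match by property type. In the sketch below the property types and all probabilities in `SAME_PERSON_PRIOR` are invented for illustration, and multiplying by a prior is a deliberately simplistic scoring rule, not a method taken from any specific tool. The point is only the ordering: a shared single-family house counts for more than a shared campus address.

```python
# Hypothetical priors (invented numbers): how likely two records with the same
# name at the same address are the same person, by property type.
SAME_PERSON_PRIOR = {
    "single_family_house": 0.95,
    "high_rise": 0.60,
    "nursing_home": 0.40,
    "campus": 0.30,
}


def same_address_match_probability(name_similarity: float, property_type: str) -> float:
    """Scale name similarity by the property-type prior.
    Unknown property types fall back to a neutral 0.5 (an assumption)."""
    return name_similarity * SAME_PERSON_PRIOR.get(property_type, 0.5)


print(same_address_match_probability(1.0, "single_family_house"))  # 0.95
print(same_address_match_probability(1.0, "campus"))  # 0.3
```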

Relocation services:

A common cause of false negatives in data matching is that you have compared two records where one of the postal addresses is an old one.

Bringing in National Change of Address (NCOA) services for the countries in question will help a lot.
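The effect on false negatives can be sketched like this. `MOVES` is a hypothetical in-memory change-of-address feed keyed on (name, old address); a real NCOA service is accessed through the provider's own interface and matching rules. The sketch resolves each record to its latest known address before comparing, so a record with an old address is no longer missed.

```python
# Hypothetical change-of-address feed: (name, old address) -> new address.
MOVES = {
    ("john smith", "12 high street"): "7 mill road",
}


def current_address(name: str, address: str) -> str:
    """Follow recorded moves until no further relocation is known
    (the `seen` set guards against cycles in bad data)."""
    seen = set()
    while (name, address) in MOVES and (name, address) not in seen:
        seen.add((name, address))
        address = MOVES[(name, address)]
    return address


def same_person(rec_a: dict, rec_b: dict) -> bool:
    """Exact name match plus equal current address after applying moves."""
    if rec_a["name"] != rec_b["name"]:
        return False
    return current_address(rec_a["name"], rec_a["address"]) == current_address(
        rec_b["name"], rec_b["address"]
    )


old = {"name": "john smith", "address": "12 high street"}
new = {"name": "john smith", "address": "7 mill road"}
print(old["address"] == new["address"])  # False: naive comparison misses the match
print(same_person(old, new))  # True once the move is applied
```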

The optimal way of doing that (and utilizing business and address directories) is to make it a continuous element of Master Data Management (MDM) as explored in the post The Relocation Event.

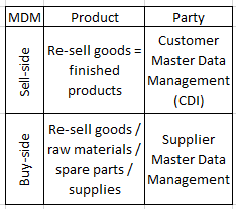

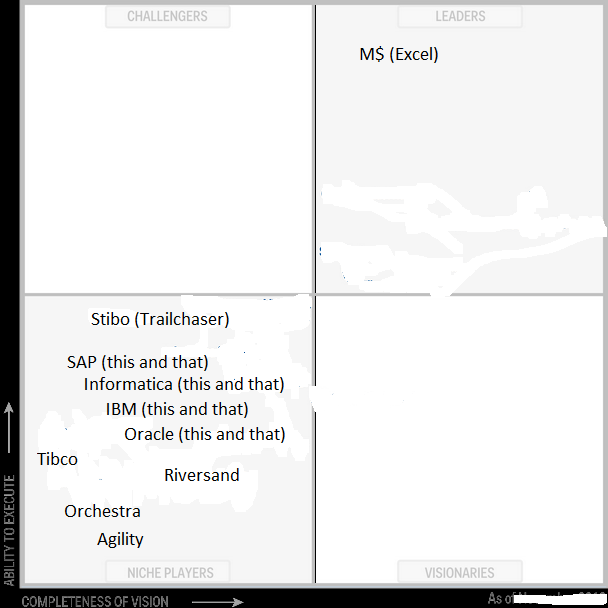

There are arguments for and against both approaches. Probably the most common argument against the MDM hub approach is: why solve the issue of having X data silos by creating data silo X + 1? The argument against naming a given application as the place of master data is that an application is built for a specific purpose and is therefore not well suited to other uses of master data.

Much of the talking and doing related to Master Data Management (MDM) today revolves around the master data repository being the central data store for information about customers, suppliers and other parties, products, locations, assets and whatever else is regarded as a master data entity.