The differences between a data warehouse and a data lake have been discussed a lot, for example here and here.

To summarize, the main point in my eyes is this: in a data warehouse the purpose and structure are determined before uploading data, while with a data lake the purpose and structure of the data can be determined before downloading it. As a result, a data warehouse is characterized by rigidity and a data lake by agility.
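The distinction can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the column names, the function names and the in-memory lists standing in for a warehouse table and a lake. It is not how any particular product works. The warehouse side rejects anything that does not match the structure agreed up front, while the lake side stores raw payloads and lets the consumer decide on the structure when fetching.

```python
import json

# Hypothetical warehouse schema, fixed before any data is loaded.
WAREHOUSE_COLUMNS = {"sku": str, "name": str, "price": float}


def load_into_warehouse(table, record):
    """Structure decided before upload: reject records that do not fit."""
    if set(record) != set(WAREHOUSE_COLUMNS):
        raise ValueError(f"unexpected structure: {sorted(record)}")
    for column, expected_type in WAREHOUSE_COLUMNS.items():
        if not isinstance(record[column], expected_type):
            raise ValueError(f"{column} must be {expected_type.__name__}")
    table.append(record)


def store_in_lake(lake, raw):
    """Data lake upload: keep the raw payload, impose no structure."""
    lake.append(raw)


def read_from_lake(lake, fields):
    """Structure decided before download: the consumer picks the fields."""
    result = []
    for raw in lake:
        doc = json.loads(raw)
        # Fields a given payload lacks come back as None.
        result.append({f: doc.get(f) for f in fields})
    return result


warehouse, lake = [], []
load_into_warehouse(warehouse, {"sku": "A1", "name": "Drill", "price": 99.0})
store_in_lake(lake, '{"sku": "A1", "name": "Drill", "voltage": "18V"}')
store_in_lake(lake, '{"sku": "B2", "colour": "red"}')
rows = read_from_lake(lake, ["sku", "voltage"])
```

The second lake record would be rejected by `load_into_warehouse`, yet it sits in the lake without complaint. That is the agility, and it is also why some control on top is needed.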

Agility is a good thing, but of course, you have to put some control on top of it as reported in the post Putting Context into Data Lakes.

Furthermore, there are some great opportunities in extending the use of the data lake concept beyond the traditional use of a data warehouse. You should think beyond using a data lake within a given organization and envision how you can share a data lake within your business ecosystem. Moreover, you should consider using a data lake not only for analytical purposes but also for operational purposes.

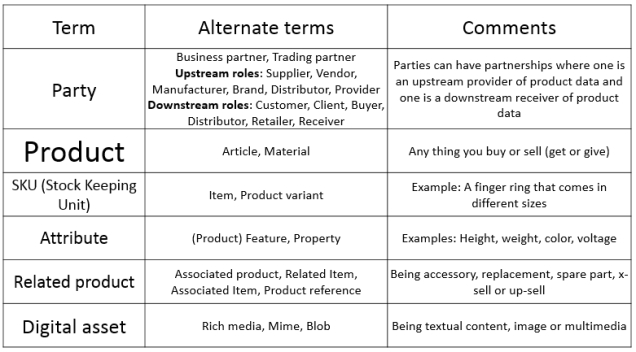

The venture I am working on right now has this second take on a data lake. The Product Data Lake exists in the context of sharing product information between trading partners in an agile and process-driven way. The providers of product information, typically manufacturers and upstream distributors, upload product information according to the data management maturity level of their organization. For now, this information may very well be stored according to traditional data warehouse principles. The receivers of product information, typically downstream distributors and retailers, download product information according to the data management maturity level of their organization. For now, this information may very well end up in a data store organized by traditional data warehouse principles.

The other approaches I have seen for sharing product information between trading partners are built on having a data warehouse-like solution between the trading partners, with a high degree of consensus around purpose and structure. Such solutions are in my eyes only successful when restricted narrowly to a given industry, probably within a given geography, for a given span of time.

By utilizing the data lake concept in the exchange zone between trading partners, you can share information at your own pace of maturing in data management and take advantage of data sharing where it fits in your roadmap to digitalization. The business ecosystems where you participate are great sources of data for both analytical and operational purposes, and we cannot wait until everyone agrees on the same purpose and structure. It only takes two to start the tango.

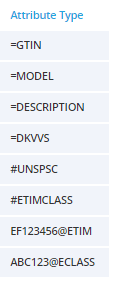

The Product Data Lake tagging approach

But the market analysis and the trends observed are good stuff as well. In my eyes we need solutions built on the data lake concept if we want business agility – and we do want that. But I also believe that we need to put data in data lakes in context.

Using third party data for customer and supplier master data seems to be a very good idea as exemplified in the post
