This blog has earlier had some December blog posts about how Santa Claus deals with data quality (Santa Quality) and master data management (Multi-Domain MDM Santa Style).

This blog has earlier had some December blog posts about how Santa Claus deals with data quality (Santa Quality) and master data management (Multi-Domain MDM Santa Style).

As I like to be on the top of the hype curve I was preparing a post about how Santa digs into big data, including social data streams, to be better at finding out who is nice and who is naughty and what they really want for Christmas. But then I suddenly had a light bulb moment saying: Wait, why don’t you take your own medicine and look up who that Santa guy really is?

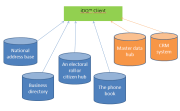

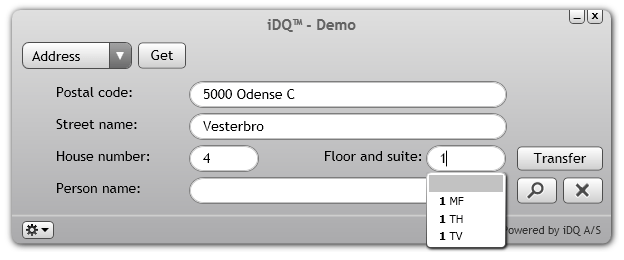

Starting in social media checking twitter accounts was shocking. All profiles are fake. FaceBook, Linkedin and other social networks all turned out having no real Santa Claus. Going to commercial third party directories and open government data had the same result. No real Santa Claus there. Some address directories had a postal code with a relation like the postcode “H0 H0 H0” in Canada and “SAN TA1” in the UK, but they seem to kind of fake too.

Starting in social media checking twitter accounts was shocking. All profiles are fake. FaceBook, Linkedin and other social networks all turned out having no real Santa Claus. Going to commercial third party directories and open government data had the same result. No real Santa Claus there. Some address directories had a postal code with a relation like the postcode “H0 H0 H0” in Canada and “SAN TA1” in the UK, but they seem to kind of fake too.

So, shifting from relying on the purpose of use to real world alignment I have concluded that Santa Claus doesn’t exist and therefore he can’t have a data store looking like a toy elephant or any other big data operations going on.

Also I won’t, based on the above instant data quality mash up, register Santa Claus (Inc.) as a prospective customer in my CRM system. Sorry.