Every organization needs Master Data Management (MDM). But does every organization need an MDM tool?

In many ways the MDM tools we see on the market resemble common database tools. But there are some things MDM tools do better than a common database management tool. The post called The Database versus the Hub outlines three such features:

- Controlling hierarchical completeness

- Achieving a Single Business Partner View

- Exploiting Real World Awareness

Controlling hierarchical completeness and achieving a single business partner view are closely related to the two things data quality tools do better than common database systems, as explained in the post Data Quality Tools Revealed. These two features are:

- Data profiling and

- Data matching

Specialized data profiling tools are very good at providing out-of-the-box functionality for statistical summaries and frequency distributions of the unique values and formats found within the fields of your data sources, in order to measure data quality and find critical areas that may harm your business. These capabilities are often better and easier to use than what you find inside an MDM tool. However, in order to measure the improvement in a business context and fix the problems as more than a one-off, you need a solid MDM environment.
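To make the idea of frequency distributions over values and formats a bit more concrete, here is a minimal sketch in Python. It is not how any particular profiling or MDM product works; the function names `format_pattern` and `profile_column` and the sample postal codes are made up for illustration.

```python
from collections import Counter


def format_pattern(value: str) -> str:
    """Reduce a value to its format: digits become 9, letters become A."""
    out = []
    for ch in value:
        if ch.isdigit():
            out.append("9")
        elif ch.isalpha():
            out.append("A")
        else:
            out.append(ch)  # keep punctuation and spaces as they are
    return "".join(out)


def profile_column(values):
    """Return simple profiling statistics for one column (hypothetical helper)."""
    non_empty = [v.strip() for v in values if v is not None and v.strip()]
    return {
        "rows": len(values),
        "missing": len(values) - len(non_empty),
        "distinct": len(set(non_empty)),
        "value_counts": Counter(non_empty),
        "format_counts": Counter(format_pattern(v) for v in non_empty),
    }


# Made-up sample data: note the letter O used instead of zero in the last value.
postal_codes = ["2100", "2100", "DK-2100", "", None, "21OO"]
profile = profile_column(postal_codes)
print(profile["format_counts"].most_common())
```

A rare format such as `99AA` among mostly `9999` values is the kind of outlier a profiling tool would surface for closer inspection.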

When it comes to data matching, we also still see specialized solutions that are more effective and easier to use than what is typically delivered inside MDM solutions. Besides that, we also see business scenarios where it is better to do the data matching outside the MDM platform, as examined in the post The Place for Data Matching in and around MDM.
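As a rough illustration of what data matching does at its simplest, the sketch below compares two names using edit distance (Levenshtein distance). Real matching solutions use far more elaborate techniques; the function names, the normalization step and the 0.85 threshold are assumptions chosen for this example only.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def normalize(name: str) -> str:
    """Lowercase and collapse whitespace before comparing."""
    return " ".join(name.lower().split())


def similarity(a: str, b: str) -> float:
    """Score between 0.0 and 1.0 based on edit distance over the longer length."""
    a, b = normalize(a), normalize(b)
    longest = max(len(a), len(b))
    if longest == 0:
        return 1.0
    return 1.0 - edit_distance(a, b) / longest


def is_probable_match(a: str, b: str, threshold: float = 0.85) -> bool:
    """Flag a candidate duplicate; the threshold is an arbitrary assumption."""
    return similarity(a, b) >= threshold


print(is_probable_match("Jon Smith", "John Smith"))
print(is_probable_match("Jon Smith", "Mary Jones"))
```

Pairs flagged this way are only candidates. Whether to merge them automatically or send them to a data steward is one of the decisions that affects where the matching should take place.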

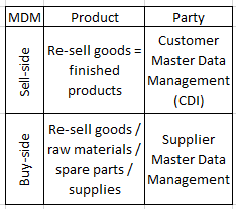

Looking at the individual MDM domains, we also see alternatives. Customer Relationship Management (CRM) systems are a popular choice for managing customer master data. But as explained in the post CRM systems and Customer MDM, CRM systems are said to deliver a Single Customer View but usually they don’t. The way CRM systems are built, used and integrated is a sure path to creating duplicates. Some remedies for that are touched upon in the post The Good, Better and Best Way of Avoiding Duplicates.

With product master data we also have Product Information Management (PIM) solutions. From what I have seen, PIM solutions have one key capability that sets them apart from a common database solution and from the many MDM solutions that are built with party master data in mind: a flexible, super-user-oriented way of building hierarchies and assigning attributes to entities, in this case particularly products. If you offer customer self-service, as in eCommerce, with products that have varying attributes, you need PIM functionality. If you want to do this smartly, you also need a collaboration environment for supplier self-service, as pondered in the post Chinese Whispers and Data Quality.

All in all, the necessary components and combinations for a suitable MDM toolbox are plentiful and can be obtained by one-stop shopping or by putting some best-of-breed solutions together.

So this year it may be time to have a closer look at big data quality, Santa style, meaning how we can imagine Santa Claus joining the rise of big data while observing that exploiting data, big or small, is only going to add real value if you believe in data quality. Ho ho ho.

The founder of The Matching Institute is Alexandra Duplicado. Aleksandra says: “The reason I founded The Institute of Data Matching is that I am sick and tired of receiving duplicate letters with different spellings of my name and address”. Alex is also pleased that she has now found a nice office within edit distance of her home.

While MDM solutions have since then been picking up a share of the data matching being done, it is still a fairly small proportion of data matching that is performed within MDM solutions. Even if you have an MDM solution with data matching capabilities, you might still consider where data matching should be done. Some considerations I have come across are:

Legal Form in Company Names