In a recent post here on the blog, the benefits of instant data enrichment were discussed.

In the context of contact data capture, these are some examples:

- Getting a standardized address at contact data entry makes it possible for you to easily link to sources with geocodes, property information and other location data.

- Obtaining a company registration number or other legal entity identifier (LEI) at data entry makes it possible to enrich with a wealth of available data held in public and commercial sources.

- Having a person’s name spelled according to available sources for the country in question helps a lot with typical data quality issues such as uniqueness and consistency.

However, if you are doing business in many countries, it is a daunting task to connect with the best-of-breed sources of big reference data. On top of that, many enterprises do both business-to-business (B2B) and business-to-consumer (B2C) activities, including interacting with small business owners. This means you have to link to the best sources available for addresses, companies and individuals.

A solution to this challenge is using Cloud Service Brokerage (CSB).

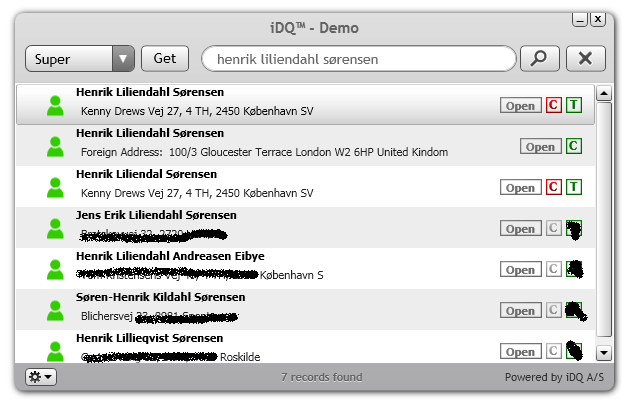

An example of a Cloud Service Brokerage suite for contact data quality is the instant Data Quality (iDQ™) service I’m working with right now.

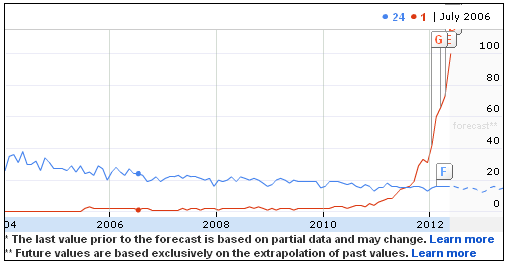

This service can connect to big reference data cloud services from all over the world. Some are open data services in the contact data realm, some are international commercial directories, and some are among the many national reference data services for addresses, companies and individuals. Even social network profiles are on the radar.
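
To make the brokerage idea a bit more concrete, here is a minimal sketch in Python of how a broker could route a lookup to the sources registered for a given country and entity type, falling back to international sources. This is not the iDQ™ API; the `Broker` class, the `"*"` wildcard for international sources and the two stub lookups are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A lookup takes a free-text query and returns candidate records.
Lookup = Callable[[str], List[dict]]


@dataclass
class Broker:
    """Hypothetical broker: routes queries to sources keyed by (country, entity type)."""
    sources: Dict[Tuple[str, str], List[Lookup]] = field(default_factory=dict)

    def register(self, country: str, entity_type: str, lookup: Lookup) -> None:
        self.sources.setdefault((country, entity_type), []).append(lookup)

    def search(self, country: str, entity_type: str, query: str) -> List[dict]:
        # Ask national sources first, then international ones registered under "*".
        results: List[dict] = []
        for key in ((country, entity_type), ("*", entity_type)):
            for lookup in self.sources.get(key, []):
                results.extend(lookup(query))
        return results


# Stub sources standing in for real reference data services (assumed, not real APIs).
def dk_company_register(query: str) -> List[dict]:
    return [{"source": "national company register (stub)", "name": query, "country": "DK"}]


def international_directory(query: str) -> List[dict]:
    return [{"source": "international directory (stub)", "name": query}]


broker = Broker()
broker.register("DK", "company", dk_company_register)
broker.register("*", "company", international_directory)

hits = broker.search("DK", "company", "Example ApS")
```

The point of the design is that the data entry application only talks to the broker. Adding a new country or a new kind of source means registering one more lookup, not changing the application.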