During the last couple of years I have been talking about social MDM (Social Master Data Management) on this blog.

MDM (Master Data Management) mainly consists of two disciplines: CDI (Customer Data Integration) and PIM (Product Information Management).

With social MDM, most of the talk has been about CDI: integrating social network profiles with traditional customer (or party) master data.

But there is also a PIM side of social MDM.

Making product data lively

The other day Kimmo Kontra had a blog post called With Tiger’s clubs, you’ll golf better – and what it means to Product Information Management. Herein Kimmo examines how stories around products help sell those products. Kimmo concludes that within master data management there is going to be a need for storing and managing stories.

I agree. Having stories related to your products and services is a must for social selling. Besides keeping the hard facts about products consistent across multiple channels, and keeping images and other rich media consistent as well, you will also need to include the right and consistent stories once those channels embrace social media.

Sharing product data

How do we ensure that we share the same product information, including the same stories, across the ecosystem of product manufacturers, distributors and retailers?

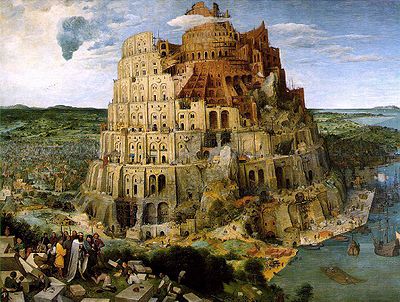

Recently I learned about a cloud service called Actualog that aims to do exactly that, with emphasis on the daunting task of sharing product data in an international environment with different measurement systems, languages, alphabets and script systems.

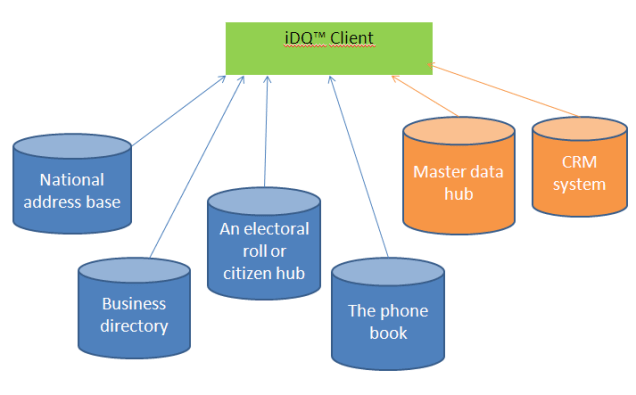

Actualog very much resembles iDQ™, the cloud service I’m working with for customer data integration.

Listening to big data

As discussed in the post Big Data and Multi Domain Master Data Management, a prerequisite for making sense of social data (and other big data sources) is that you not only have a consistent view of the products you sell yourself, but also a consistent view of competing products and how they relate to your products.

So, social PIM requires you to extend the range of products handled by your product information management solution, probably in alternate product hierarchies.