Right now I am overseeing the processing of yet another master data file with millions of records. In this case it is product master data that also has customer master data kinds of attributes, as we are working with a big pile of author names and related book titles.

The Big Buzz

Having such high numbers of master data records isn’t new at all, and compared to the size of the data collections we usually talk about when using the trendy buzzword “big data”, it’s nothing.

Data collections that qualify as big will usually be files with transactions.

However master data collections are increasing in volume and most transactions have keys referencing descriptions of the master entities involved in the transactions.

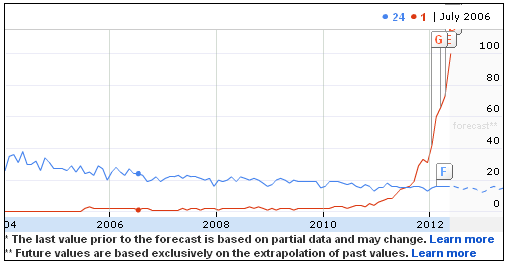

The growth of master data collections is also seen in collections of external reference data.

For example, the Dun & Bradstreet Worldbase, which holds business entities from around the world, has lately grown quickly from 100 million entities to nearly 200 million entities. Most of the growth has been due to better coverage outside North America and Western Europe, with the BRIC countries coming in fast. A smaller world resulting in bigger data.

Also, one of the BRIC countries, India, is under way with a huge project for uniquely identifying and holding information about every citizen – that’s over a billion. The project is called Aadhaar.

When we extend such external registries to social networking services as well by doing Social MDM, we are dealing with a very fast-growing number of profiles in Facebook, LinkedIn and other services.

Extreme Master Data

Gartner, the analyst firm, has a concept called “extreme data” that rightly points out that this “big data” thing is not only about volume; it is also about velocity and variety.

This is certainly true also for master data management (MDM) challenges.

Master data are exchanged between organizations more and more often and in higher and higher volumes. Data quality focus and maturity will probably not be the same across the exchanging parties. The velocity and volume make it hard to rely on people-centric solutions in these situations.

Add to that the increasing variety in master data. The variety may be international variety, as the world gets smaller and we have collections of master data embracing many languages and cultures. We also add more and more attributes each day, as governments, for example, release more data along with the open data trend, and we generally include more and more attributes in order to make better and more informed decisions.

Variety is also an aspect of Multi-Domain MDM, a subject that according to Gartner (the analyst firm once again) is one of the Three Trends That Will Shape the Master Data Management Market.

Do we need a LinkedIn group for this and that? It’s always a question. There are already a lot of LinkedIn groups for Big Data and a lot of LinkedIn groups for Data Quality.
