Yesterday I attended an event called Big Data Forum 2012 held in London.

Big data still seems to be a buzzword with many definitions. In any case, it is surely about datasets that are bigger (and more complex) than before.

The Olympics is Going to be Bigger

One session at the Big Data Forum was about how the BBC will use big data in covering the upcoming London Olympics on the BBC website.

James Howard, whom I know as speckled_jim on Twitter, explained that the bulk of the content on the BBC Sports website is not produced by the BBC. The data is sourced from external data providers, and even the structure of the content is based on those external sources.

So for the Olympics there will be rich content about all 10,000 athletes coming from all over the world. The BBC's editorial material will be linked to this content, with an emphasis on the British athletes of course.

I guess that other broadcasters and sports websites around the world will base the bulk of their content on the same sources and then, in much the same way, link their own targeted content to it with their own look and feel.

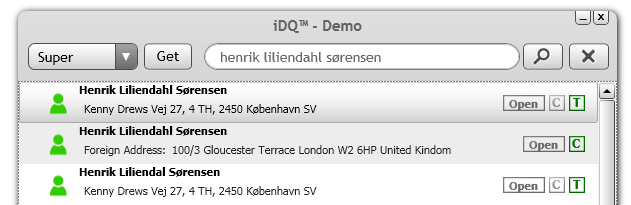

Jim mentioned that sourcing such data raises some data quality issues. For example, you may have your own guidelines for how to spell names that originate in other writing systems.

I have noticed exactly that issue in the news from major broadcasters. For example, the BBC spells the name of the new Egyptian president Mursi, while CNN spells it Morsi.
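To make the spelling point concrete, here is a minimal sketch of how a publisher might lay its own preferred spelling over a name delivered by an external provider. This is not how the BBC does it as far as I know; the `SourcedPerson` record, the `HOUSE_STYLE` table, the `apply_house_style` function and the identifier `p-001` are all names I made up for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SourcedPerson:
    """A person record as delivered by a (hypothetical) external provider."""
    source_id: str      # identifier assigned by the provider
    display_name: str   # spelling as delivered by the provider


# Hypothetical house-style table: provider identifier -> preferred spelling.
HOUSE_STYLE = {"p-001": "Mursi"}


def apply_house_style(person, overrides=HOUSE_STYLE):
    """Return the record with the house spelling, if one is defined."""
    preferred = overrides.get(person.source_id)
    if preferred is None:
        return person  # no local guideline, keep the provider's spelling
    return replace(person, display_name=preferred)


delivered = SourcedPerson(source_id="p-001", display_name="Morsi")
published = apply_house_style(delivered)
```

The sourced record keeps its identifier, so the link to the external data survives while the published spelling follows the local guideline.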

Bigger Data in Party Master Data Management

The postal validation firm Postcode Anywhere recently had a blog post called Big Data – What’s the Big Deal?

The post expresses the well-known sentiment that you may use your resources better by addressing data quality in “small data” rather than fighting with big data, and that getting valid addresses into your party master data is a very good place to start.

I couldn’t agree more about getting valid addresses.

However, I also see some opportunities in sharing bigger datasets for valid addresses. For example:

- The reference dataset for UK addresses, typically based on the Royal Mail Postal Address File (PAF), is not that big. But the reference dataset for addresses from all over the world is bigger and more complex. And with increasing globalization, we need valid addresses from all over the world.

- Rich address reference data will become more and more available. The UK PAF is not that big. The AddressBase from Ordnance Survey in the UK is bigger and more complex. The same goes for similar location reference datasets from all over the world that carry more information than basic postal attributes, not least when they are handled together.

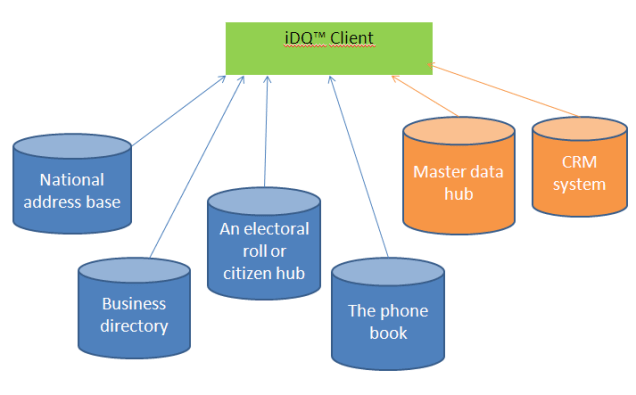

- A valid address based on address reference data only tells you whether the address is valid, not whether the addressee is (still) at the address. Therefore, you often need to combine address reference data with business directories and consumer/citizen reference sources. That means bigger and more complex data as well.

Similar to how the BBC is covering the Olympics, my guess is that organizations will increasingly share bigger public reference data on addresses, business entities and consumers/citizens. They will then link to it the private master data they consider more accurate (like the spelling example), along with the essential data elements that better support their way of doing business and make them more competitive.
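As a rough illustration of the points above, the sketch below links an internal party record to shared reference data. It checks the address against an address reference set, checks the addressee against a directory, and leaves the private attributes untouched. All data is made up, and `ADDRESS_REFERENCE`, `DIRECTORY` and `link_party` are hypothetical names of mine, not the format of PAF, AddressBase or any real directory.

```python
# Hypothetical shared address reference data: address id -> formatted address.
ADDRESS_REFERENCE = {
    "addr-1": "1 Example Street, Sampletown",
    "addr-2": "2 Example Street, Sampletown",
}

# Hypothetical business directory: address id -> names registered there.
DIRECTORY = {"addr-1": {"Example Ltd"}}


def link_party(internal):
    """Link an internal party record to the shared reference data."""
    address_id = internal["address_id"]
    registered = DIRECTORY.get(address_id, set())
    return {
        **internal,  # private attributes are kept as they are
        "reference_address": ADDRESS_REFERENCE.get(address_id),
        "address_valid": address_id in ADDRESS_REFERENCE,
        "addressee_found": internal["name"] in registered,
    }


party = {"name": "Example Ltd", "address_id": "addr-2", "segment": "gold"}
linked = link_party(party)
```

In this made-up case the address is valid but the addressee is not found there, which is exactly the gap that address reference data alone cannot reveal.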

My recent post Mashing Up Big Reference Data and Internal Master Data describes a solution for linking bigger data within business processes in order to get a valid address and beyond.

Today I am visiting the Call Centre and Customer Management Expo 2012 in London and have a chance to learn about what’s going on in this area – and what happens to data quality and master data management.
