Most data quality and master data management gurus, experts and practitioners agree that a “single source of truth” is a nice term, but not what data quality and master data management are really about, as expressed by Michele Goetz in the post Master Data Management Does Not Equal The Single Source Of Truth.

Even among those people, including me, who think that emphasizing real world alignment could lead to better data and information quality than focusing on fitness for multiple different purposes of use, there is an acknowledgement that there is a “digital distance” between real world aligned data and the real world, as explained by Jim Harris in the post Plato’s Data. Also, different publicly available reference data sources that should reflect the real world for the same entity are often in disagreement.

When working on improving data quality in party master data, which is the master data domain where issues are most common, you encounter the same challenges over and over again, like:

- Many organizations have a considerable overlap of real world entities that are a customer and a supplier at the same time. When expanding to other party roles, this intersection is even bigger. This calls for a 360° Business Partner View.

- Most organizations divide activities into business-to-business (B2B) and business-to-consumer (B2C). But the great majority of businesses are small companies where business and private matters are mixed, as told in the post So, how about SOHO homes.

- When doing B2C, including membership administration in non-profits, you often have a mix of single individuals and households in your core customer database, as reported in the post Household Householding.

- As examined in the post Happy Uniqueness, there are many good fit for purpose of use reasons why customer and other party master data entities are deliberately duplicated within different applications.

- Lately, social master data management (Social MDM) has emerged as the new leg in mastering data within multi-channel business. Embracing a wealth of digital identities will become yet another challenge in getting a single customer view and reaching for the impossible, and not always desirable, single source of truth.

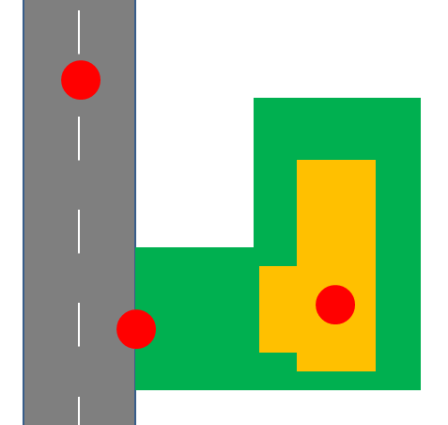

A way of getting some kind of structure into this possible, and actually very common, mess is to strive for a hierarchical single source of truth where the concept of a golden record is implemented as a model with golden relations between real world aligned external reference data and internal fit for purpose of use master data.
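
As a rough illustration of that idea, here is a minimal Python sketch. It is not taken from any MDM product: the class names (`ReferenceEntity`, `MasterRecord`, `GoldenModel`), the fields and the sample data are all hypothetical. It assumes external reference entities form a simple parent/child hierarchy (for example a household with its individuals) and that each internal, fit for purpose record keeps exactly one "golden relation" to a reference entity instead of being merged into a single golden record.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class ReferenceEntity:
    """Real world aligned entity from an external reference source (hypothetical)."""
    ref_id: str
    kind: str                        # e.g. "household", "individual", "company"
    name: str
    parent_id: Optional[str] = None  # link upwards in the hierarchy


@dataclass(frozen=True)
class MasterRecord:
    """Internal, fit for purpose record that stays in its own application."""
    system: str
    record_id: str
    role: str                        # e.g. "customer", "supplier", "member"
    name: str


@dataclass
class GoldenModel:
    """Holds the hierarchy and the golden relations (record -> reference entity)."""
    entities: Dict[str, ReferenceEntity] = field(default_factory=dict)
    relations: Dict[MasterRecord, str] = field(default_factory=dict)

    def add_entity(self, entity: ReferenceEntity) -> None:
        self.entities[entity.ref_id] = entity

    def link(self, record: MasterRecord, ref_id: str) -> None:
        # A golden relation may only point at a known reference entity.
        if ref_id not in self.entities:
            raise KeyError(f"unknown reference entity: {ref_id}")
        self.relations[record] = ref_id

    def subtree(self, ref_id: str) -> Set[str]:
        # Collect the entity itself plus everything below it in the hierarchy.
        ids = {ref_id}
        changed = True
        while changed:
            changed = False
            for entity in self.entities.values():
                if entity.parent_id in ids and entity.ref_id not in ids:
                    ids.add(entity.ref_id)
                    changed = True
        return ids

    def records_for(self, ref_id: str) -> List[MasterRecord]:
        # All internal records related to the entity or any of its descendants.
        ids = self.subtree(ref_id)
        return [rec for rec, target in self.relations.items() if target in ids]

    def roles_of(self, ref_id: str) -> Set[str]:
        # Party roles played by exactly this reference entity.
        return {rec.role for rec, target in self.relations.items() if target == ref_id}


# Made-up sample data: one household, two individuals, three applications.
model = GoldenModel()
model.add_entity(ReferenceEntity("H1", "household", "Example household"))
model.add_entity(ReferenceEntity("P1", "individual", "Person One", parent_id="H1"))
model.add_entity(ReferenceEntity("P2", "individual", "Person Two", parent_id="H1"))

model.link(MasterRecord("crm", "C-100", "customer", "P. One"), "P1")
model.link(MasterRecord("erp", "S-200", "supplier", "Person One Consulting"), "P1")
model.link(MasterRecord("membership", "M-300", "member", "Person Two"), "P2")

print(model.roles_of("P1"))
print([rec.system for rec in model.records_for("H1")])
```

In this sketch the deliberately duplicated records stay where they are. The customer/supplier overlap and the household view both fall out of querying the relations at the right level of the hierarchy.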

Right now I’m having an exciting time doing just that, as described in the post Doing MDM in the Cloud.