A recent post on the InfoTrellis blog takes up the good old data matching question of Deterministic Matching versus Probabilistic Matching.

The post gives a good walk-through of the topic and reaches this conclusion:

“So, which is better, Deterministic Matching or Probabilistic Matching? The question should actually be: ‘Which is better for you, for your specific needs?’ Your specific needs may even call for a combination of the two methodologies instead of going purely with one.”
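To make the "combination of the two methodologies" a bit more concrete, here is a minimal sketch. It is not taken from the InfoTrellis post. The field names (`tax_id`, `name`, `address`), the weights and the threshold are all hypothetical, and the "probabilistic" part is only a weighted string similarity from Python's standard library standing in for a proper statistical model with agreement weights.

```python
from difflib import SequenceMatcher

# Hypothetical field weights for the similarity score (assumption, not from the post).
WEIGHTS = {"name": 0.6, "address": 0.4}


def similarity(a: str, b: str) -> float:
    """Return a 0..1 string similarity after simple normalization."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def deterministic_match(a: dict, b: dict) -> bool:
    """Exact agreement on a trusted identifier (hypothetical field 'tax_id')."""
    return bool(a.get("tax_id")) and a.get("tax_id") == b.get("tax_id")


def probabilistic_score(a: dict, b: dict) -> float:
    """Weighted similarity across fields; a simplified stand-in for a real model."""
    return sum(w * similarity(a.get(f, ""), b.get(f, "")) for f, w in WEIGHTS.items())


def combined_match(a: dict, b: dict, threshold: float = 0.85) -> str:
    """Try the deterministic rule first, then fall back to the similarity score."""
    if deterministic_match(a, b):
        return "match (deterministic)"
    if probabilistic_score(a, b) >= threshold:
        return "match (probabilistic)"
    return "no match"
```

Running the cheap exact rule first and only scoring the remaining pairs is one possible design. Which rules and thresholds make sense depends, as the quote says, on your specific needs.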

On a side note, the author of the post is listed as MARIANITORRALBA. I had to use my combined probabilistic and deterministic, in-word-parsing-supported and social-media-connected data matching capability to match this concatenated name with the LinkedIn profile of an InfoTrellis employee called Marian Itorralba.

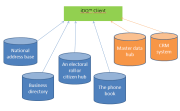

This little exercise brings me to an observation about data matching: matching party master data, not least when you do it for several purposes, is ultimately identity resolution, as discussed in the post The New Year in Identity Resolution.

For that we need what could be called hierarchical data matching.

The reason we need hierarchical data matching is that more and more organizations are looking into master data management, and then they realize that the classic name and address matching rules do not necessarily fit when party master data are going to be used for multiple purposes. What constitutes a duplicate in one context, like sending direct mail, doesn’t necessarily make a duplicate in another business function, and vice versa. Duplicates come in hierarchies.

One example is a household. You probably don’t want to send two sets of the same material to a household, but you might want to engage in a 1-to-1 dialogue with the individual members. Another example is that you might do some very different kinds of business with the same legal entity. Financial risk management is the same across all of them, but different sales or purchase processes may require very different views.
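The household example can be sketched as matching the same records at two levels of a hierarchy. This is only an illustration under simple assumptions: the sample records are made up, the keys are plain normalized exact keys, and treating "same surname at the same address" as a household is a deliberate simplification that real households often break.

```python
from collections import defaultdict

# Made-up sample records for illustration only.
records = [
    {"id": 1, "first": "Anna", "last": "Jensen", "address": "1 Main St"},
    {"id": 2, "first": "anna", "last": "Jensen", "address": "1  Main St"},
    {"id": 3, "first": "Peter", "last": "Jensen", "address": "1 Main St"},
    {"id": 4, "first": "Peter", "last": "Holm", "address": "9 Oak Ave"},
]


def norm(value: str) -> str:
    """Lowercase and collapse whitespace so trivial variations agree."""
    return " ".join(value.lower().split())


def individual_key(r: dict) -> tuple:
    """Lower level: the same person at the same address."""
    return (norm(r["first"]), norm(r["last"]), norm(r["address"]))


def household_key(r: dict) -> tuple:
    """Upper level: same surname at the same address (simplifying assumption)."""
    return (norm(r["last"]), norm(r["address"]))


def group_by(recs: list, key_fn) -> list:
    """Group record ids by key, keeping first-seen order."""
    groups = defaultdict(list)
    for r in recs:
        groups[key_fn(r)].append(r["id"])
    return list(groups.values())


individuals = group_by(records, individual_key)  # for the 1-to-1 dialogue
households = group_by(records, household_key)    # for the direct mail
```

With these sample records, ids 1 and 2 are duplicates at the individual level, while ids 1, 2 and 3 form one group at the household level. Both answers are right, each for its own purpose.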

This matter is discussed in the post called Hierarchical Data Matching and, not least, in its comments.
