A recent discussion on the LinkedIn Multi-Domain MDM group is about vendor / supplier portals as a part of Product Information Management implementations.

A supplier portal (or vendor portal if you like) is usually an extension to a Product Information Management (PIM) solution. The idea is that the suppliers of products, and thus providers of product information, to you as a downstream participant (distributor or retailer) in a supply chain, can upload their product information into your PIM solution and thus relieving you of doing that. This process usually replace the work of receiving spreadsheets from suppliers in the many situations where data pools are not relevant.

In my opinion and experience, this is a flawed concept, because it is hostile to the supplier. The supplier will have hundreds of downstream receivers of products and thus product information. If all of them introduced their own supplier portal, they will have to learn and maintain hundreds of them. Only if you are bigger than your supplier is and is a substantial part of their business, they will go with you.

Another concept, which is the opposite, is also emerging. This is manufacturers and upstream distributors establishing PIM customer portals, where suppliers can fetch product information. This concept is in my eyes flawed exactly the opposite way.

Another concept, which is the opposite, is also emerging. This is manufacturers and upstream distributors establishing PIM customer portals, where suppliers can fetch product information. This concept is in my eyes flawed exactly the opposite way.

And then let us imagine that every provider of product information had their PIM customer portal and every receiver had their PIM supplier portal. Then no data would flow at all.

What is your opinion and experience?

All these solutions constitutes one of the leading Multi-Domain MDM offerings on the market – if not the leading. We will be wiser on that question when Gartner (the analyst firm) makes their first Multi-Domain MDM Magic Quadrant later this year as reported in the post

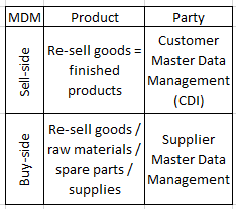

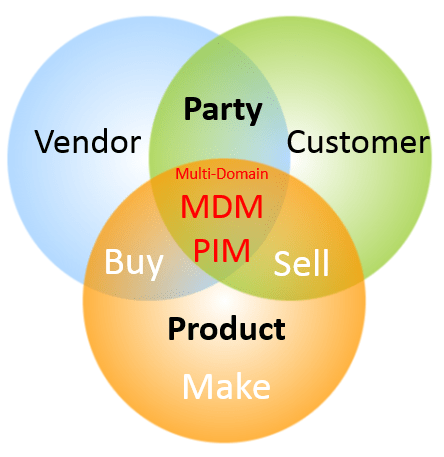

All these solutions constitutes one of the leading Multi-Domain MDM offerings on the market – if not the leading. We will be wiser on that question when Gartner (the analyst firm) makes their first Multi-Domain MDM Magic Quadrant later this year as reported in the post  This is a classic consideration at the heart of multi-domain MDM. As I see it, and what I advise my clients to do, is to have a common party (or business partner) structure for identification, names, addresses and contact data. This should be supported by data quality capabilities strongly build on external reference data (third party data). Besides this common structure, there should be specific structures for customer, vendor/supplier and other party roles.

This is a classic consideration at the heart of multi-domain MDM. As I see it, and what I advise my clients to do, is to have a common party (or business partner) structure for identification, names, addresses and contact data. This should be supported by data quality capabilities strongly build on external reference data (third party data). Besides this common structure, there should be specific structures for customer, vendor/supplier and other party roles. Using an inheritance approach is a better way. This approach is for product master data based on having a mature hierarchy management in place. When creating a new product you place your product in the hierarchy where it will inherit the attributes common for products on the same branch of the hierarchy and leave it for you to fill in the exact attributes that is specific for the new product. If a new product requires a new branch in the hierarchy, you are forced to think about the common attributes for this branch through.

Using an inheritance approach is a better way. This approach is for product master data based on having a mature hierarchy management in place. When creating a new product you place your product in the hierarchy where it will inherit the attributes common for products on the same branch of the hierarchy and leave it for you to fill in the exact attributes that is specific for the new product. If a new product requires a new branch in the hierarchy, you are forced to think about the common attributes for this branch through.

So this year it may be the time to have a closer look at big data quality, Santa style, meaning how we can imagine Santa Claus is joining the raise of big data while observing that exploiting data, big or small, is only going to add real value if you believe in data quality. Ho ho ho.

So this year it may be the time to have a closer look at big data quality, Santa style, meaning how we can imagine Santa Claus is joining the raise of big data while observing that exploiting data, big or small, is only going to add real value if you believe in data quality. Ho ho ho. But, have any new trends showed up in the past years?

But, have any new trends showed up in the past years?