Previous years' close-to-Christmas posts on this blog have been about Multi-Domain MDM, Santa Style and Data Governance, Santa Style.

So this year it may be time to have a closer look at big data quality, Santa style, meaning how we can imagine Santa Claus joining the rise of big data while observing that exploiting data, big or small, is only going to add real value if you believe in data quality. Ho ho ho.

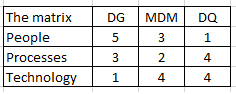

At the Santa Claus organization they have figured out that there is a close connection between excellence in working with big data and excellence in multi-domain Master Data Management (MDM) and data governance.

Here are some of the findings in the big data paper that the Chief Data Elf just signed off:

- The feasibility of the new algorithms for naughty or nice marking using social media listening combined with our historical records is heavily dependent on unique, accurate and timely boys and girls master data. The party data governance elf gathering will be accountable for any nasty and noisy issues.

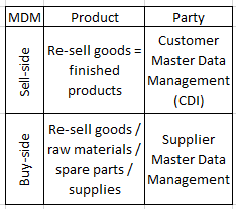

- Implementation of the automated present buying service based on fuzzy matching between our supplier self-service based multi-lingual product catalogue and the wish list data lake must be done in a phased schedule. The product data governance elf committee is responsible for avoiding any false positives (wrong present incidents) and decreasing the number of false negatives (someone not getting what could be purchased within the budget).

- Last year we had a 12.25% overspend on reindeer due to incorrect and missing chimney positions. This year the reliance on crowdsourced positions will be better balanced with utilizing open government property data where possible. The location data governance elves will consult with the elves living on the roof at each head of state in order to make them release more and better-quality data of this kind (the Gangnam Project).
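
The trade-off between false positives and false negatives in the present-matching finding can be made concrete with a minimal sketch. It assumes a simple string-similarity score from Python's standard `difflib` module and a single cut-off; the catalogue, the wishes, the `best_match` helper and the 0.7 threshold are all invented for illustration and are not taken from the elves' paper.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical sample data, invented for illustration only.
CATALOGUE = ["Wooden train set", "Red bicycle", "Teddy bear, brown", "Ice skates"]
WISHES = ["wooden trainset", "teddy bear", "a pony"]


def similarity(a: str, b: str) -> float:
    """Return a similarity ratio between 0 and 1 for two strings, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def best_match(wish: str, catalogue: list[str], threshold: float) -> Optional[str]:
    """Return the best-scoring catalogue item, or None if it scores below the threshold."""
    best = max(catalogue, key=lambda item: similarity(wish, item))
    return best if similarity(wish, best) >= threshold else None


for wish in WISHES:
    print(wish, "->", best_match(wish, CATALOGUE, threshold=0.7))
```

With this sample data, raising the threshold to 0.9 makes the "teddy bear" wish go unmatched (a false negative), while lowering it far enough would eventually pair "a pony" with something in the catalogue (a false positive). Picking the cut-off is a business rule, which is why it sits with a governance committee rather than with the matching code.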

An important part of implementing Master Data Management (MDM) is to capture the business rules that exist within the implementing organization and build those rules into the solution. In addition, and maybe even more important, is the quest of crafting new business rules that help make master data more valuable to the implementing organization.

While the innovators and early adopters are fighting with big data quality, the late majority are still trying to get their heads around how to manage small data. And that is a good thing, because you cannot utilize big data without solving small data quality problems, not least around master data, as told in a separate post.

Solving data quality problems is not just about fixing data. It is very much also about fixing the structures around data, as explained in a post featuring the pope.

A common roadblock on the way to solving data quality issues is that things that are everybody’s problem tend to be no one’s problem. Implementing a data governance programme is evolving as the answer to that conundrum. As with many things in life, data governance is about thinking big and starting small, as told in a separate post.

Data governance revolves a lot around people’s roles, and there are also some specific roles within data governance. Data owners have been known for a long time, data stewards have been around for some time, and now we also see Chief Data Officers emerge, as examined in a separate post.

The growth of available data to support your business is a challenge today. Your competitors take advantage of new data sources and better exploitation of known data sources while you are sleeping. New competitors emerge with business ideas based on new ways of using data.
