Now, this blog post title might sound silly, as we generally consider true positives to be the cream of data matching. A true positive means that we have found a match between two data records that reflect the same real-world entity, that the match has been confirmed as true, and that based on it we can eliminate a harmful and costly duplicate in our records.

The reason this still isn’t an optimal situation is that the duplicate shouldn’t have entered our data store in the first place. Avoiding duplicates up front is by far the best option.

So, how do you do that?

You may aim for low-latency duplicate prevention by catching the duplicates in (near) real time, running duplicate checks after records have been captured but before they are committed to whatever data store holds the entities in question. But this is still about finding true positives while staying aware of false positives.
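To make the idea of a check between capture and commit a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the field names (`name`, `address`), the 0.85 threshold, the in-memory list standing in for the data store, and the use of the standard library's `difflib` as the similarity measure. A real matching engine would be considerably more refined.

```python
from difflib import SequenceMatcher


def normalize(value: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not count."""
    return " ".join(value.lower().split())


def similarity(a: dict, b: dict, fields=("name", "address")) -> float:
    """Average character-level similarity over the compared fields (0.0 to 1.0)."""
    scores = [
        SequenceMatcher(None, normalize(a[f]), normalize(b[f])).ratio()
        for f in fields
    ]
    return sum(scores) / len(scores)


def commit_if_new(candidate: dict, store: list, threshold: float = 0.85):
    """Check a captured record against the store before committing it.

    Returns the suspected duplicate if one scores at or above the threshold
    (hypothetical value), otherwise appends the candidate and returns None.
    """
    for existing in store:
        if similarity(candidate, existing) >= threshold:
            return existing  # suspected match: leave the store untouched
    store.append(candidate)
    return None
```

The threshold is where the trade-off sits: set it too low and the check blocks records that are not duplicates (false positives), set it too high and real duplicates slip through. A suspected match should therefore be shown to the person entering the data rather than being silently rejected.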

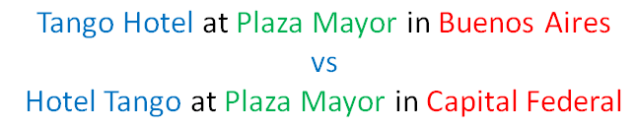

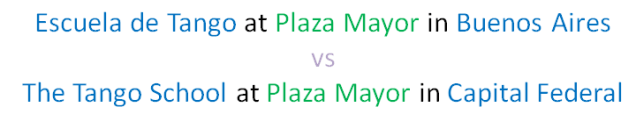

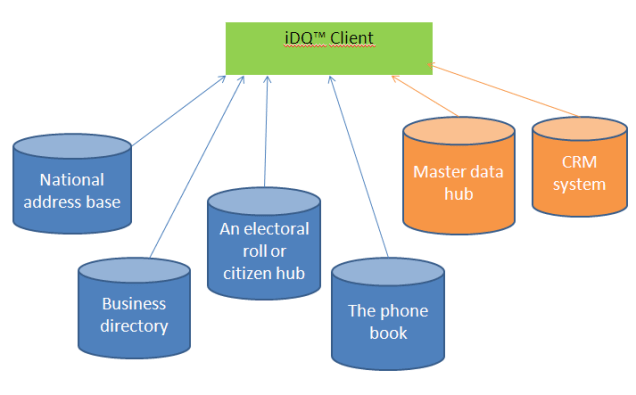

The best way is to aim for instant data quality. That is, instead of entering data for the (supposed) new records, you pick the data from data stores that are already available, presumably in the cloud, through an error-tolerant search that covers external data as well as the records already in the internal data store.
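As a rough illustration of what such an error-tolerant search could look like, here is a small Python sketch. It is not how any particular product works. The source labels (`internal`, `external`), the single `name` field, the 0.6 cutoff and the `difflib` similarity measure are all assumptions chosen to keep the example short.

```python
from difflib import SequenceMatcher


def search(query: str, sources: dict, limit: int = 5, cutoff: float = 0.6) -> list:
    """Rank records from every source by similarity to a possibly misspelled query.

    `sources` maps a source label (hypothetical: "internal", "external") to a
    list of records with a "name" field. Returns (score, source, record)
    tuples, best match first.
    """
    normalized_query = " ".join(query.lower().split())
    hits = []
    for source_name, records in sources.items():
        for record in records:
            text = " ".join(record["name"].lower().split())
            score = SequenceMatcher(None, normalized_query, text).ratio()
            if score >= cutoff:
                hits.append((score, source_name, record))
    # Sort on the score only, so records themselves never need to be comparable.
    hits.sort(key=lambda hit: hit[0], reverse=True)
    return hits[:limit]
```

The point of the design is that the user picks a record from the ranked list instead of typing a new one. If the best hit comes from the internal store, the entity already exists and no duplicate is created. If it comes from an external source, the record can be created from data that is already validated.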

This is exactly the kind of solution I’m working with right now. And oh yes, it is actually called instant Data Quality.