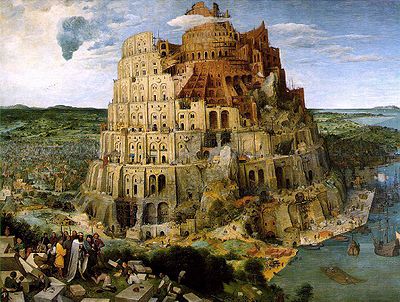

3 years ago one of the first blog posts on this blog was called The Tower of Babel.

This post was the first of many posts about multi-cultural challenges in data quality improvement. These challenges includes not only language variations but also different character sets reflecting different alphabets and script systems, naming traditions, address formats, measure units, privacy norms, government registration practice to name some of the ones I have experienced.

When organizations are working internationally it may be tempting to build a new Tower of Babel imposing the same language for metadata (probably English) and the same standards for names, addresses and other master data (probably the ones of the country where the head quarter is).

However, building such a high tower may end up the same way as the Tower of Babel known from the old religious tales.

Alternatively a mapping approach may be technically a bit more complex but much easier when it comes to change management.

The mapping approach is used in the Universal Postal Unions’ (UPU) attempt to make a “standard” for worldwide addresses. The UPU S42 standard is mentioned in the post Down the Street. The S42 standard does not impose the same way of writing on envelopes all over the world, but facilitates mapping the existing ways into a common tagging mapped to a common structure.

Building such a mapping based “standard” for addresses, and other master data with international diversity, in your organization may be a very good way to cope with balancing the need for standardization and the risks in change management including having trusted and actionable master data.

The principle of embracing and mapping international diversity is a core element in the service I’m currently working with. It’s not that the instant Data Quality service doesn’t stretch into the clouds. Certainly it is a cloud service pulling data quality from the cloud. It’s not that that it isn’t big. Certainly it is based on big reference data.