You may divide identity resolution into these categories:

- Hard-core identity check

- Lightweight real-world alignment

- Digital identity resolution

Hard-Core Identity Check

Some business processes require a solid identity check. This is typically the case for credit approval and employment enrolment. Identity checks are also part of criminal investigation and the fight against terrorism.

Services for identity checks vary from country to country because regulations and the availability of reference data differ.

An identity check usually involves the entity being checked.

Lightweight Real-World Alignment

In data quality improvement and Master Data Management (MDM), you often include some form of identity resolution to keep your data aligned with the real world. For example, when evaluating the result of a data matching activity on names and addresses, you perform a lightweight identity resolution that leads to marking the matched results as true or false positives.

This kind of identity resolution usually doesn’t involve the entity being examined.
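As an illustration of that evaluation step, here is a minimal sketch in Python. It assumes a small, hypothetical in-memory reference dataset that maps a normalized (name, address) pair to a real-world identity ID; in practice the reference data would come from a register or directory service, and the names `Record`, `REFERENCE`, `resolve` and `label_match` are made up for this example. A candidate match from the matching engine is labeled a true positive when both records resolve to the same real-world identity, and a false positive when they resolve to different ones.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Record:
    record_id: str
    name: str
    address: str


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting differences don't matter.
    return " ".join(text.lower().split())


# Hypothetical reference data: normalized (name, address) -> real-world identity ID.
REFERENCE: dict[tuple[str, str], str] = {
    ("john smith", "1 main street"): "P-001",
    ("jon smith", "1 main street"): "P-001",  # known spelling variant of the same person
    ("john smith", "9 oak road"): "P-002",    # a different John Smith
}


def resolve(record: Record) -> Optional[str]:
    """Return the real-world identity behind a record, or None if the reference data doesn't know it."""
    return REFERENCE.get((normalize(record.name), normalize(record.address)))


def label_match(a: Record, b: Record) -> str:
    """Label a candidate match produced by a data matching activity."""
    id_a, id_b = resolve(a), resolve(b)
    if id_a is None or id_b is None:
        return "undecided"  # reference data can neither confirm nor reject the match
    return "true positive" if id_a == id_b else "false positive"


candidates = [
    (Record("r1", "John Smith", "1 Main Street"), Record("r2", "Jon  Smith", "1 main street")),
    (Record("r3", "John Smith", "1 Main Street"), Record("r4", "John Smith", "9 Oak Road")),
    (Record("r5", "Jane Doe", "2 Elm Street"), Record("r6", "Jane Doe", "2 Elm St")),
]
for a, b in candidates:
    print(a.record_id, b.record_id, label_match(a, b))
```

Here the first pair comes out as a true positive, the second as a false positive, and the third as undecided. The "undecided" label is a design choice of this sketch: it reflects that lightweight alignment only works as far as the available reference data reaches.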

Digital Identity Resolution

Our existence has increasingly moved to the online world. As discussed in the post Addressing Digital Identity, this means that we will also need ways to include digital identity in traditional identity resolution.

There is, of course, ongoing discussion about how far digital identity resolution should be possible. For example, enforcing real-name policies in social networks is a hot topic.

Future Trends

With regard to digital identity resolution, the jury is still out. In my view, the economic consequences of the rising social sphere will inevitably affect the demand for knowing who is out there, and the opportunities for establishing identity via digital footprints will be exploited.

My guess is that the distinction between hard-core identity checks and real-world alignment in data quality improvement and MDM will disappear as reference data becomes more available and its price goes down.

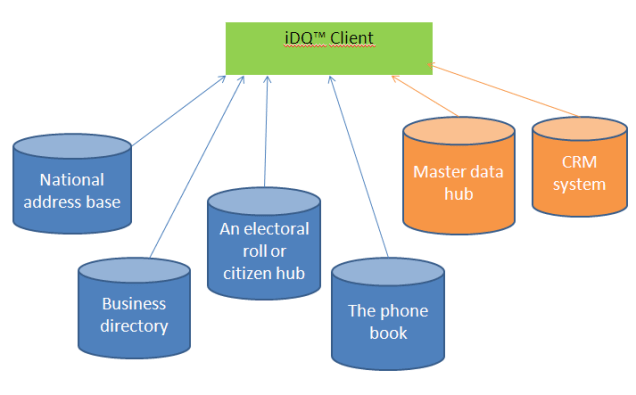

That’s why I’m currently working on a solution (www.instantdq.com) that combines identity check features and a data universe with master data management, with the possibility of adding digital identity to the mix.