When talking about master data management, we usually divide the discipline into domains, of which the two most prominent are:

- Customer, or rather party, master data management

- Product, sometimes also named “things”, master data management

One of the most frequently mentioned additional domains is locations.

But although locations are all around us, we seldom see a business initiative aimed at enterprise-wide location data management under a slogan of having a 360-degree view of locations. Most often locations are seen as a subset of either the party master data or, in some cases, the product master data.

Industry diversity

The need for having locations as a focus area varies between industries.

In some industries, like public transit, where I have worked a lot, locations are implicit in the delivered services. Travel and hospitality is another example of a tight connection between the product and a location. Some insurance products also have a location element. And do I have to mention real estate: Location, Location, Location.

In other industries the location has a more moderate relation to the product domain. There may be some considerations around plant and warehouse locations, but that’s usually not high volume and complex stuff.

Locations as a main factor in exploiting demographic stereotypes are important in retailing and other business-to-consumer (B2C) activities. When doing B2C you often want to see your customer as the household where the location is a main, but treacherous, factor in doing so. We had a discussion on the house-holding dilemma in the LinkedIn Data Matching group recently.

Whenever you, or a partner of yours, are delivering physical goods or a physical letter of any kind to a customer, it’s crucial to have high quality location master data. The impact of not having that is of course dependent on the volume of deliveries.

Globalization

If you ask me about London, I will instinctively think of the London in England. But there is a pretty big London in Canada too, which would be top of mind for other people. And there are other, smaller Londons around the world.

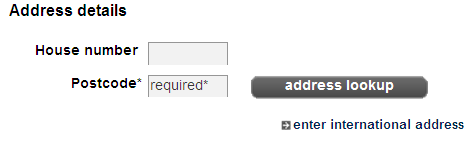

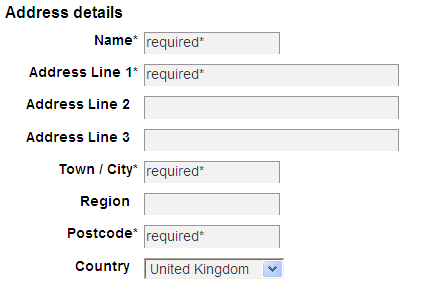

Master data with location attributes increasingly comes in populations covering more than one country. It’s not that ambiguous place names don’t exist in single-country sets; ambiguous place names were the main reason why many countries introduced a postal code system. However, the British and the Canadians invented systems that include letters, unlike most other systems, which use only numbers, typically with an embedded geographic hierarchy.
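To make the difference between postal code systems concrete, here is a minimal sketch of per-country format checks. The patterns and the `looks_like_postal_code` helper are my own simplified illustrations, not official rules; real postal systems have more exceptions than these regular expressions cover.

```python
import re

# Simplified, illustrative patterns (assumptions, not official specifications).
POSTAL_PATTERNS = {
    "GB": re.compile(r"^[A-Z]{1,2}\d[A-Z\d]? ?\d[A-Z]{2}$"),  # letters and digits, e.g. SW1A 1AA
    "CA": re.compile(r"^[A-Z]\d[A-Z] ?\d[A-Z]\d$"),           # letters and digits, e.g. N6A 3K7
    "US": re.compile(r"^\d{5}(-\d{4})?$"),                    # digits only, e.g. 90210
    "DK": re.compile(r"^\d{4}$"),                             # digits only, e.g. 2500
}


def looks_like_postal_code(country_code: str, value: str) -> bool:
    """Return True if value matches the simplified pattern for the country."""
    pattern = POSTAL_PATTERNS.get(country_code.upper())
    if pattern is None:
        raise ValueError(f"No pattern registered for country {country_code!r}")
    # Normalize case and surrounding whitespace before matching.
    return bool(pattern.match(value.strip().upper()))
```

The point of keying the patterns by country is that the same string can be valid in one country and nonsense in another, so a check without a country code says very little.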

Apart from the different standards in use, the possibilities for exploiting external reference data differ widely between countries on data quality dimensions such as timeliness, consistency, completeness and conformity, and not least on price.

Handling location data from many countries at the same time ruins many best practices that have worked for a single country.

Geocoding

Instead of identifying locations textually by country codes, state/province abbreviations, postal codes and/or city names, street names and types or blocks, and house numbers and names, it has become increasingly popular to use geocoding as a supplement or even an alternative.

There are different types of geocodes out there suitable for different purposes. Examples are:

- Latitude and longitude, picturing a round world

- UTM X, Y coordinates, picturing peels of the world

- Web Mercator X, Y coordinates (built on the WGS84 datum), picturing a world as flat as your computer screen
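As a rough illustration of how these geocode types relate, the sketch below starts from latitude and longitude and derives the UTM zone number (which "peel" a point falls in) and the flat-screen X, Y used by most web maps, commonly called Web Mercator and built on the WGS84 ellipsoid radius. The function names are my own, the UTM part ignores the special zones around Norway and Svalbard, and the Mercator part uses the simple spherical formula.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 semi-major axis in metres


def utm_zone(lon_deg: float) -> int:
    """Standard 6-degree UTM zone number (1-60) for a longitude.

    Ignores the Norway/Svalbard exceptions to the regular grid.
    """
    return int((lon_deg + 180.0) // 6) % 60 + 1


def web_mercator(lat_deg: float, lon_deg: float) -> tuple[float, float]:
    """Spherical Web Mercator X, Y in metres for a latitude/longitude."""
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4 + math.radians(lat_deg) / 2))
    return x, y
```

For example, `utm_zone(-0.1276)` puts the London in England in zone 30, while `utm_zone(-81.25)` puts the London in Canada in zone 17.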

While geocoding has a lot to offer in identification and global standardization, we of course have a gap between geocodes and everyday language. If you want to learn more, then come and visit me at N55°38′47″, E12°32′58″.
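Part of that gap is purely notational. Reading the coordinates above as 55 degrees, 38 minutes, 47 seconds north and 12 degrees, 32 minutes, 58 seconds east (my reading of the intended notation), a small helper can turn them into the decimal degrees most software expects. The `dms_to_decimal` name is just an illustration.

```python
def dms_to_decimal(degrees: int, minutes: int, seconds: float, hemisphere: str) -> float:
    """Convert degrees/minutes/seconds plus a hemisphere letter to decimal degrees."""
    hemisphere = hemisphere.upper()
    if hemisphere not in ("N", "S", "E", "W"):
        raise ValueError(f"Unknown hemisphere {hemisphere!r}")
    # South and west are negative in the usual signed convention.
    sign = -1.0 if hemisphere in ("S", "W") else 1.0
    return sign * (degrees + minutes / 60.0 + seconds / 3600.0)


latitude = dms_to_decimal(55, 38, 47, "N")   # roughly 55.6464
longitude = dms_to_decimal(12, 32, 58, "E")  # roughly 12.5494
```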
