When working with data matching, you often find that there is basically a bright view and a dark view.

Traditional data matching, as seen in most data quality tools and master data management solutions, is the bright view: it is about finding duplicates and making a “single customer view”. Identity resolution is the dark view: preventing fraud and catching criminals, terrorists and other villains.

These two poles were discussed last year in a blog post and the comments that followed. The post was called What is Identity Resolution?

While deduplication and identity resolution may be treated as polar opposites and seemingly contrary disciplines, they are in my eyes interconnected and interdependent. Yin and Yang Data Quality.

At the MDM Summit in London last month, one session was about the Golden Nominal, Creating a Single Record View. Here Corinne Brazier, Force Records Manager at the West Midlands Police in the UK, explained how a traditional data quality tool with some matching capabilities was used to deal with “customers” who don’t want to be recognized.

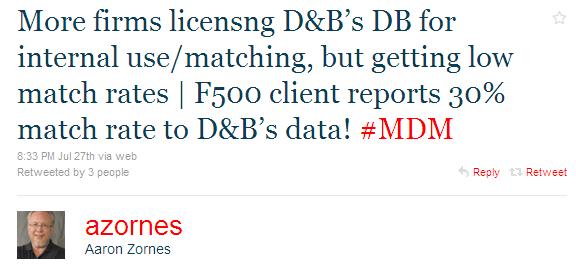

The post How to Avoid Losing 5 Billion Euros examined how both traditional data matching tools and identity screening services can be used to prevent and discover fraudulent behavior.

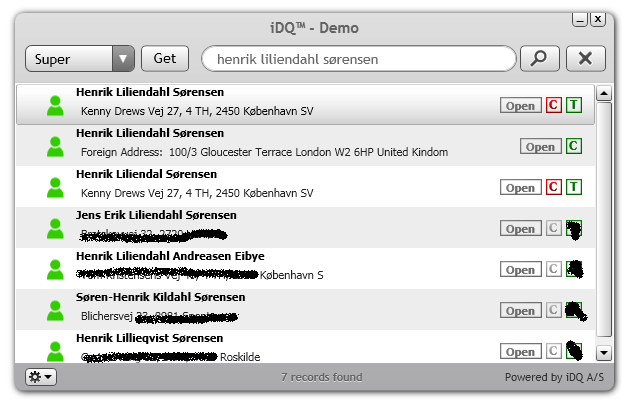

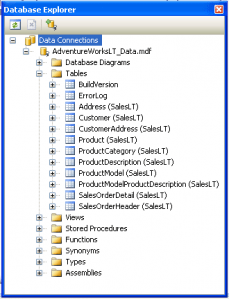

Deduplication becomes better when some element of identity resolution is added to the process. That includes embracing big reference data. Knowing what available sources say about the addresses that are being matched helps. Knowing what business directories say about companies helps. Knowing what appropriate citizen directories say helps when deduping records holding data about individuals.
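As a rough illustration of how reference data can support deduplication, here is a minimal Python sketch. Everything in it is made up for the example: the `ADDRESS_DIRECTORY` lookup, the `ADDR-…` identifiers and the helper names are hypothetical stand-ins for a real address directory, which would be far larger and accessed through a proper service.

```python
# Hypothetical address directory (assumption for illustration only):
# normalized address variants mapped to a reference ID.
ADDRESS_DIRECTORY = {
    "1 main street": "ADDR-001",
    "1 main st": "ADDR-001",
    "12 high street": "ADDR-002",
}


def normalize(text):
    """Lower-case, drop punctuation and collapse whitespace."""
    cleaned = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(cleaned.split())


def reference_id(address):
    """Look up the reference ID for an address, or None if it is unknown."""
    return ADDRESS_DIRECTORY.get(normalize(address))


def confirmed_same_address(a, b):
    """True only when both addresses resolve to the same reference ID."""
    id_a, id_b = reference_id(a), reference_id(b)
    return id_a is not None and id_a == id_b


print(confirmed_same_address("1 Main Street", "1 Main St."))   # True
print(confirmed_same_address("1 Main St.", "12 High Street"))  # False
```

The point of the sketch is that the two spellings are not compared with each other at all. They are each resolved against what is known in the directory, and an address the directory does not know is never confirmed.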

Identity resolution techniques are based on the same data matching algorithms we use for deduplication. Here, for example, fuzzy search technology helps a lot compared to using wildcards. And of course the same sources as mentioned above are key to the resolution.
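To make the wildcard versus fuzzy search point concrete, here is a small Python sketch using only the standard library. The names, the search pattern and the 0.85 threshold are assumptions picked for illustration, and `difflib.SequenceMatcher` is just a simple stand-in for the matching algorithms found in real tools.

```python
from difflib import SequenceMatcher
from fnmatch import fnmatchcase

# Made-up name to search for and made-up candidate records.
WATCHED_NAME = "Jonathan Smith"
CANDIDATES = ["Jonathon Smith", "Jonathan Smyth", "Jon Smith", "Maria Jones"]


def wildcard_hits(pattern, names):
    """Return names matching a shell-style wildcard pattern, ignoring case."""
    return [n for n in names if fnmatchcase(n.lower(), pattern.lower())]


def fuzzy_hits(target, names, threshold=0.85):
    """Return names whose similarity ratio to target meets the threshold."""
    hits = []
    for name in names:
        score = SequenceMatcher(None, target.lower(), name.lower()).ratio()
        if score >= threshold:
            hits.append(name)
    return hits


# The wildcard only finds variants that share the exact typed prefix.
print(wildcard_hits("Jonathan Sm*", CANDIDATES))  # ['Jonathan Smyth']

# The fuzzy search also catches the misspelled first name.
print(fuzzy_hits(WATCHED_NAME, CANDIDATES))  # ['Jonathon Smith', 'Jonathan Smyth']
```

With a wildcard you have to guess in advance where the variation will be. The fuzzy search tolerates a deviation anywhere in the name, at the price of having to choose a threshold: at 0.85 the shorter "Jon Smith" is not returned.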

Right now I’m diving deep into the world of big reference data such as address directories, business directories, citizen directories and the next big thing: social network profiles. I have no doubt that deduplication and identity resolution will be more yinyang than yin and yang in the future.