One of the big trends in data quality improvement is the move from downstream cleansing to upstream prevention. So let’s talk about the Amazon. No, not the online (book)store, but the river. Besides, I am a bit tired of almost every mention of innovative IT being about that eShop.

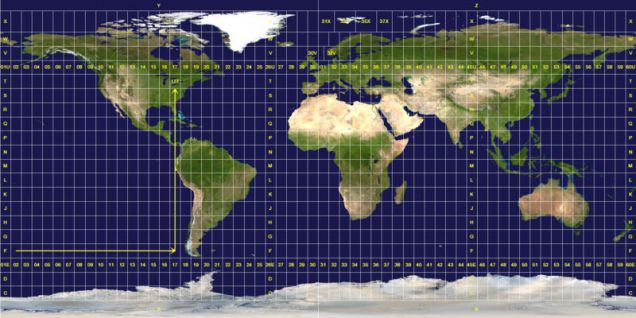

A map of the Amazon River drainage basin reveals what may turn out to be a huge challenge in going upstream and solving data quality issues at the source: there may be a lot of sources. Okay, the Amazon is the world’s largest river (because it carries more water to the sea than any other river), so this may be a picture of the data streams in a very large organization. But even more modest organizations have many sources of data, just as more modest rivers also have several sources.

By the way: the Amazon River also shares a source with the Orinoco River through the natural Casiquiare Canal, just as many organizations also share sources of data.

Some sources are not so easy to reach, such as the most distant source of the Amazon: a glacial stream on a snowcapped 5,597 m (18,363 ft) peak called Nevado Mismi in the Peruvian Andes.

Now, as I promised that the trend on this blog should be about positivity and success in data quality improvement, I will not dwell on the amount of work involved in going upstream and preventing dirty data at every source.

I say: Go to the clouds. The clouds are the sources of the water in the river. I also think that cloud services will make improving data quality a lot easier, as explained in a recent post called Data Quality from the Cloud.

Finally, the clouds over the Amazon River sources are made from water evaporated from the Amazon and a lot of other waters as part of the water cycle. In the same way, data has a cycle of being derived as information and then created in a new form as a result of the actions taken when using that information.

I think data quality work in the future will embrace the full data cycle: Downstream cleansing, upstream prevention and linking in the cloud.