When selling data quality software tools and services, I have often used external sources for business contact data. Not least when working with data matching and party master data management implementations in business-to-business (B2B) environments, I have seen such data uploaded into CRM sources.

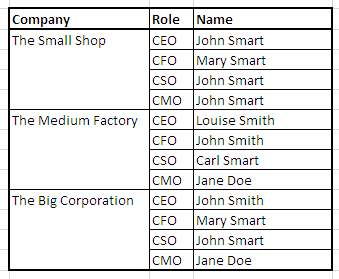

A typical external source for B2B contact data will look like this:

Some of the issues with such data are:

- Some of the contact names may refer to the same real-world individual, as told in the post Echoes in the Database

- People change jobs all the time. The external lists will typically have entries verified some time ago, and when you upload them to your own databases, the data will quickly become useless due to data decay.

- When working with large companies in customer and other business partner roles, you often won’t interact with the top-level people, but with people at lower levels who are not reflected in such external sources.
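The first two issues can be sketched in a few lines of Python. This is only a minimal illustration, not a real matching engine: the `Contact` fields, the crude `normalize` rule, the one-year staleness threshold and the sample records are all assumptions made up for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Contact:
    name: str
    company: str
    verified: date  # hypothetical "last verified" date from the external list


def normalize(name: str) -> str:
    # Crude normalization (an assumption): lowercase, drop periods,
    # collapse whitespace. Real matching would be far more forgiving.
    return " ".join(name.lower().replace(".", " ").split())


def possible_duplicates(contacts):
    # Group entries whose normalized names are identical; such a group
    # may be one real-world individual listed at several companies.
    groups = defaultdict(list)
    for contact in contacts:
        groups[normalize(contact.name)].append(contact)
    return [group for group in groups.values() if len(group) > 1]


def stale(contacts, today, max_age_days=365):
    # Flag entries verified longer ago than the (arbitrary) threshold.
    return [c for c in contacts if (today - c.verified).days > max_age_days]


# Made-up sample records, for illustration only.
contacts = [
    Contact("Jane A. Doe", "Acme Corp", date(2010, 3, 1)),
    Contact("jane a doe", "Globex Ltd", date(2012, 6, 15)),
    Contact("John Smith", "Initech", date(2012, 9, 1)),
]

duplicates = possible_duplicates(contacts)
outdated = stale(contacts, today=date(2013, 1, 1))
```

With these sample records, the two Jane Doe entries end up in one candidate group and the oldest entry is flagged as stale. Whether a group really is the same person, and whether an old entry is still valid, are exactly the questions a list like this cannot answer on its own.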

The rise of social networks has presented new opportunities for overcoming these challenges as examined in a post (written some years ago) called Who is working where doing what?

However, I haven’t seen many attempts yet to automate and include working with social network profiles in business processes. Surely there are technical issues, and not least privacy considerations, in doing so, as discussed in the post Sharing Social Master Data.

Right now we have a discussion going on in the LinkedIn Social MDM group about examples of connecting social network profiles and master data management. Please add your experiences in the group here – and join if you aren’t already a member.