No, I am not going to continue some of the recent fine debates on who within a given company is data owner, accountable and responsible for data quality.

My point today is that many views on data ownership, the importance of upstream prevention and fitness for purpose of use in a business context are based on an assumption that the data in a given company is entered by that company, maintained by that company and consumed by that company.

In the business world today, this is in many cases not true.

Examples:

Direct marketing campaigns

Running a direct marketing campaign and sending out catalogues is often an eye-opener about the quality of data in your customer and prospect master files. But such things are very often outsourced.

Your company extracts a file with say 100.000 names and addresses from your databases and you pay a professional service provider a fee for each row for doing the rest of the job.


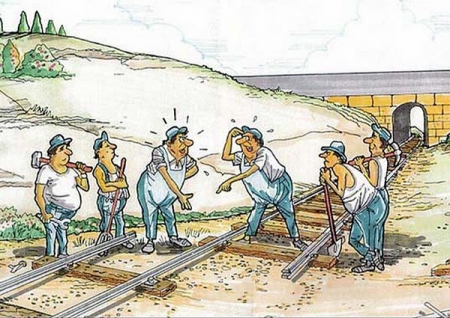

Now the service provider could do you the kind favour of carefully deduplicating the file, eliminating the 5.000 purge candidates and bringing you the pleasant message that the bill will be reduced by 5 %.

Yes, I know, some service providers actually include deduplication in their offerings. And yes, I know, they are not always that interested in using an advanced solution for that.

I see the business context here – but unfortunately it’s not your business.
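To make the arithmetic above concrete, here is a minimal sketch of what such a deduplication pass and the resulting bill reduction could look like. The function names, the sample rows and the crude match key (lowercasing, stripping punctuation, collapsing whitespace) are all assumptions made for illustration; a real provider would more likely use fuzzy matching and address standardisation.

```python
import re


def match_key(name: str, address: str) -> str:
    """Crude, hypothetical match key: lowercase, drop punctuation, collapse spaces."""
    text = f"{name} {address}".lower()
    text = re.sub(r"[^\w\s]", "", text)
    return " ".join(text.split())


def deduplicate(rows):
    """Keep the first row seen for each match key; return (kept, purged)."""
    seen = set()
    kept, purged = [], []
    for name, address in rows:
        key = match_key(name, address)
        if key in seen:
            purged.append((name, address))
        else:
            seen.add(key)
            kept.append((name, address))
    return kept, purged


def bill_reduction_percent(total_rows: int, purged_rows: int) -> float:
    """Share of a per-row fee saved when purged rows are not processed."""
    return 100.0 * purged_rows / total_rows


# Invented sample rows, only for illustration.
rows = [
    ("John Smith", "1 Main St."),
    ("john smith", "1 Main St"),
    ("Mary Jones", "22 High Street"),
    ("Mary  Jones", "22 High Street"),
    ("Peter Brown", "5 Park Lane"),
]

kept, purged = deduplicate(rows)
print(len(kept), "rows kept,", len(purged), "purge candidates")
print(bill_reduction_percent(100_000, 5_000), "% off the bill")
```

With the figures from the example above, 5.000 purge candidates out of 100.000 rows is the 5 % the provider would lose on a per-row fee, which is exactly why the incentive to look hard for duplicates is weak.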

Factoring

Sending out invoices is often a good test of how well customer master data is entered and maintained. But again, using an outsourced service for that, like factoring, is becoming more common.

Your company hands over the name and address, receives most of the money, and the data is out of sight.

Now the factoring service provider has a pretty good interest in assuring the quality of the data and aligning the data with a real world entity.

Unfortunately this cannot be done upstream; it's a downstream batch process, probably with no signalling back to the source.

Customer self service

Today data entry clerks are rapidly being replaced as the customer is doing all the work themselves on the internet. Maybe the form is provided by you, maybe – as often with hotel reservations – the form is provided by a service provider.

So here you basically either have to extend your data governance all the way to your customer's living room or office, or to some degree (fortunately?) accept that the customer owns the data.


Now India jumps on the bandwagon and starts assigning a unique ID to the 1.2 billion people living in India. As I understand it, the project has just been named Aadhar (or Aadhaar). Google Translate tells me this word (आधार) means base or root; please correct me if anyone knows better.
