In the LinkedIn Multi-Domain MDM group we have an ongoing discussion about why you need a master data hub when you already got some workflow, UI and a database.

I have been involved in several master data quality improvement programs without having the opportunity of storing the results in a genuine MDM solution, for example as described in the post Lean MDM. And of course this may very well result in a success story.

I have been involved in several master data quality improvement programs without having the opportunity of storing the results in a genuine MDM solution, for example as described in the post Lean MDM. And of course this may very well result in a success story.

However there are some architectural reasons why many more organizations than those who are using a MDM hub today may find benefits in sooner or later having a Master Data hub.

Hierarchical Completeness

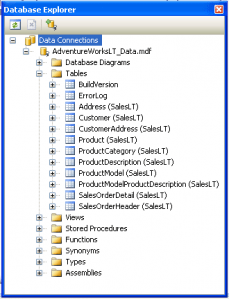

If we start with product master data the main issue with storing product master data is the diversity in the requirements for which attributes is needed and when they are needed dependent on the categorization of the products involved.

Typical you will have hundreds or thousands of different attributes where some are crucial for one kind of product and absolutely ridiculous for another kind of product.

Modeling a single product table with thousands of attributes is not a good database practice and pre-modeling tables for each thought categorization is very inflexible.

Setting up mandatory fields on database level for product master data tables is asking for data quality issues as you can’t miss either over-killing or under-killing.

Also product master data entities are seldom created in one single insertion, but is inserted and updated by several different employees each responsible for a set of attributes until it is ready to be approved as a whole.

A master data hub, not at least those born in the product domain, is built for those realities.

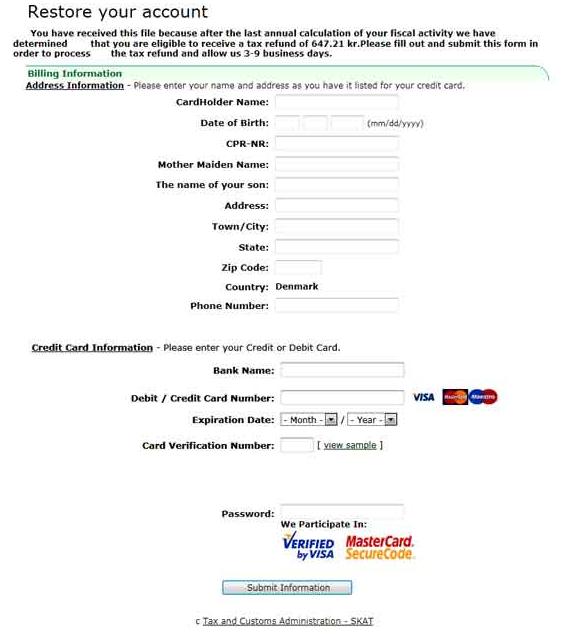

The party domain has hierarchical issues too. One example will be if a state/province is mandatory on an address, which is dependent on the country in question.

Single Business Partner View

I like the term “single business partner view” as a higher vision for the more common “single customer view”, as we have the same architectural requirements for supplier master data, employee master data and other master data concerning business partners as we have for the of course extremely important customer master data.

The uniqueness dimension of data quality has a really hard time in common database managers. Having duplicate customer, supplier and employee master data records is the most frequent data quality issue around.

In this sense, a duplicate party is not a record with accurately the same fields filled and with accurate the same values spelled accurately the same as a database will see it. A duplicate is one record reflecting the same real world entity as another record and a duplicate group is more records reflecting the same real world entity.

Even though some database managers have fuzzy capabilities they are still very inadequate in finding these duplicates based on including several attributes at one time and not at least finding duplicate groups.

Finding duplicates when inserting supposed new entities into your customer list and other party master data containers is only the first challenge concerning uniqueness. Next you have to solve the so called survivorship questions being what values will survive unavoidable differences.

Finally the results to be stored may have several constructing outcomes. Maybe a new insertion must be split into two entities belonging to two different hierarchy levels in your party master data universe.

A master data hub will have the capabilities to solve this complexity, some for customer master data only, some also for supplier master data combined with similar challenges with product master data and eventually also other party master data.

Domain Real World Awareness

Building hierarchies, filling incomplete attributes and consolidating duplicates and other forms of real world alignment is most often fulfilled by including external reference data.

There are many sources available for party master as address directories, business directories and citizen information dependent on countries in question.

With product master data global data synchronization involving common product identifiers and product classifications is becoming very important when doing business the lean way.

Master data hubs knows these sources of external reference data so you, once again, don’t have to reinvent the wheel.